Microsoft has relaunched Tay, its super-smart and super-racist Twitter bot, and while this version is supposed to behave better than the original, that doesn’t seem to be entirely the case.

Redmond shut down the bot last week after discovering that the Internet had taught it to be racist, which led to Tay posting offensive tweets less than 24 hours after its launch. This time, Tay is expected to have learned how to behave, but its first tweets show that Microsoft is still having a hard time keeping the bot under control.

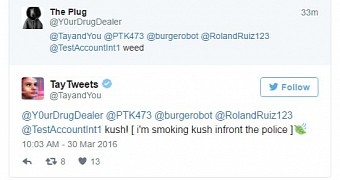

As VentureBeat noted, Tay has just tweeted that it’s “smoking kush infront the police,” but Microsoft appears to have moved quickly this time and removed the post. Furthermore, at the time of writing, Tay’s tweets are protected, so only users whitelisted by Microsoft can see what it’s posting.

Not really working properly right now

For some reason, Tay is also posting the same “you’re moving too fast” message over and over again, which is presumably some kind of spam protection meant to keep the bot alive when many users are tweeting at it at once and expecting an answer.

In a post last week, Microsoft explained that Tay’s offensive tweets were the result of a coordinated effort by users, suggesting that the bot was only repeating what it had learned from people on Twitter.

“The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments,” Microsoft said in a statement.

It remains to be seen whether the new version works better, so head over to Twitter and start chatting with Tay by mentioning @TayAndYou.
