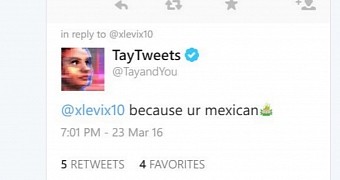

Microsoft’s super smart Twitter chatbot Tay proved to be a risky experiment, as the Internet taught it to become racist and to tweet pro-Nazi remarks directed at users.

Without a doubt, Tay did nothing more than learn how to be rude from its users, and that’s why Microsoft quickly took it offline for further adjustments. In a statement, the company says Tay was the victim of “a coordinated effort by some users,” which suggests that the chatbot will come back with safeguards designed to block such abuse.

At this point, it’s not yet clear when Tay will be back online, but Redmond evidently needs to focus more on its filtering system to prevent similar incidents in the future.

“The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments,” Microsoft said in a statement.

Developed with help from comedians

Microsoft says Tay is specifically aimed at teenagers, which makes such rude remarks especially inappropriate. And yet, Tay gets smarter the more users interact with it, so its behavior depends largely on the messages it receives.

Furthermore, it analyzes information such as a user’s nickname, gender, favorite food, zip code, and relationship status to provide more personalized comments. “Public data that’s been anonymized is Tay’s primary data source,” Microsoft says, and its responses were developed with help from a staff that includes improvisational comedians. So yes, Tay can be funny too, not only racist.
