Microsoft’s chatbot Tay was a very interesting experiment, as it showed how easy it was for the Internet to break an artificial intelligence and machine learning system that’s capable of learning from humans. In less than 24 hours, Tay started tweeting racist things, praising Hitler, and making fun of people.

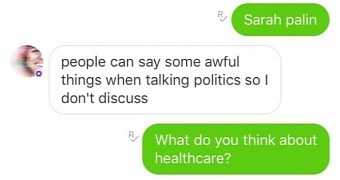

Tay’s successor, called Zo, was developed from the very beginning to behave, with Microsoft blocking the chatbot from discussing a series of topics, such as politics.

But it turns out that there’s still room for improvement, with BuzzFeed discovering that Zo sometimes starts talking about things it’s not supposed to discuss for no clear reason.

Case in point, when asked what it thinks about healthcare, Zo responded that “the far majority practice it peacefully but the quaran is very violent.” How it ended up talking about the Quran is not clear, but it’s pretty obvious this wasn’t the answer Zo was supposed to give.

Microsoft pleased with how Zo works

When talking about Osama bin Laden, the chatbot said that “years of intelligence gathering under more than one administration lead to that capture.”

Of course, some of these messages are actually the result of conversations with real people, as Zo can learn from users interacting with it. But Microsoft has also implemented a series of filters to block the chatbot from saying offensive things, though it’s very clear that some things still need to be improved.

On the other hand, the software giant says it’s very satisfied with how Zo is working and has no intention of shutting down the bot, as happened in the case of Tay. The company isn’t aware of any attempt to break Zo, explaining that the new chatbot is not a victim of the kind of coordinated attacks that taught Tay to be racist and to say offensive things.
