Having trouble finding those hidden "monsters" inside your CPU? I'll give you a helping hand in this second part of the article. I'll even supply you with some clear "blueprints" of that intricate maze inside your CPU. Just be careful not to disturb the nasty minotaurs and dragons, OK?

We know by now that it all started with the Intel 4004, which worked with 4-bit registers and could address up to 640 bytes of memory. That's history. All you see now is 512 MB, 1 GB or even 2 GB of memory squeezed into spiffy memory sticks. And they'll get bigger and bigger. But what's responsible for this ever-growing capacity? Could it be the latest Vista OS from Microsoft? Or the new wave of DirectX 10 games? How about that video-editing, 3D graphics and sound processing software? You've got it, it's all of these. In fact, it's all about the ever more complex software that aims at enabling the PC user to do more stuff in less time.

Running the latest operating system like Microsoft Windows Vista requires quite a bit of memory. Working on digital photographs or running the newest computer games requires even more. Nowadays, it is clear that every respectable PC user should have at least 1 GB of memory in order to smoothly run the majority of software applications.

CPU-Memory Interaction

We still haven't figured out how the microprocessor keeps track of all that memory capacity that put your wallet in some difficulty. To answer that, we need to talk about some of the components of a microprocessor. It's time to zoom in on the CPU and start looking for those legendary monsters with dark powers. The first part mentioned the program counter as an important part of the CPU. This program counter is actually a kind of register. The CPU incorporates a set of hardware registers that work like the sticky notes people use to remind themselves of something. The CPU owes these registers a lot, because many of the instructions the computer executes can reference them easily and quickly.

I'm going to get a little technical now, but it really helps you understand the complexity of a CPU. Several of these registers are dedicated to keeping track of memory addresses and are named accordingly. The program counter holds the address of the next instruction, and the index register is used to step automatically through tables of data. The stack register keeps track of the memory addresses the program has to return to after its subroutines finish.
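To make the three registers above a bit more concrete, here is a minimal sketch of a toy machine in Python. The instruction names (`ADD_INDEXED`, `CALL`, `RET`, `HALT`) are invented for illustration and don't belong to any real CPU; the stack is modeled as a plain list rather than a pointer into memory.

```python
# Toy model of a program counter, an index register and a stack register.
# The instruction names are made up for this example.

def run(program, table):
    pc = 0          # program counter: address of the next instruction
    index = 0       # index register: steps through the data table
    stack = []      # stack: return addresses for subroutine calls
    total = 0
    while pc < len(program):
        op, arg = program[pc]
        pc += 1                      # now points at the following instruction
        if op == "ADD_INDEXED":      # add table[index], then advance the index
            total += table[index]
            index += 1
        elif op == "CALL":           # remember where to return, then jump
            stack.append(pc)
            pc = arg
        elif op == "RET":            # go back to the saved return address
            pc = stack.pop()
        elif op == "HALT":
            break
    return total

program = [
    ("CALL", 3),            # 0: call the subroutine at address 3
    ("CALL", 3),            # 1: call it again
    ("HALT", None),         # 2: stop
    ("ADD_INDEXED", None),  # 3: subroutine body
    ("RET", None),          # 4: return to the caller
]
print(run(program, [10, 20]))  # 30
```

Each `CALL` pushes the return address, the subroutine consumes one table entry through the index register, and `RET` pops the address back into the program counter.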

I'll only briefly mention the segmented and unsegmented (flat) types of memory architecture. The segmented one became widespread because Intel promoted it, and it still exists in today's x86 CPUs. In this scheme, memory is divided into segments and an address is given as a segment plus an offset inside it, which helps the CPU manage the chunks of data it has to process.

A few words about memory protection, too. Intel's segmented architecture, in protected mode, prevents a program from delving into the memory space allotted to another application. If it tries to infiltrate, a memory fault is raised, the operating system reports it, and the invading program is terminated. You can usually recover from this situation without having to reboot, and you know exactly which program was responsible for the mess because the OS clearly names it.
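The protection idea can be sketched as a simple bounds check. This is only an illustration under assumed names: `base`, `limit` and `MemoryFault` are hypothetical, and a real CPU keeps this information in descriptor tables and also checks privilege levels, none of which is modeled here.

```python
class MemoryFault(Exception):
    """Raised when a program reaches outside its own segment."""

def translate(base, limit, offset):
    # base and limit describe one program's segment (made-up values below);
    # offset is the address the program asks for inside that segment.
    if not 0 <= offset < limit:
        raise MemoryFault(f"offset {offset:#x} is outside the segment")
    return base + offset

print(hex(translate(0x10000, 0x4000, 0x0123)))  # 0x10123

try:
    translate(0x10000, 0x4000, 0x5000)   # past the end of the segment
except MemoryFault as err:
    print("fault:", err)
```

The second call never reaches memory at all: the check fails first, which is what lets the OS stop only the offending program.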

Multitasking

Intel's 386 was the first x86 CPU whose hardware and special instructions made true multitasking practical on the PC. Nowadays, it is common to see several applications running simultaneously. But on a single CPU core this is only an illusion, as no more than one program is actually executing at any particular instant. The trick works thanks to the blazing speed of current CPUs, which let each program run for just a short slice of time and then switch to the next one.

The 386 could stop a program in its tracks and suspend it while other programs ran. Then, the OS would switch back to the first program as if nothing had ever stopped it. This is called preemptive multitasking and it's an important concept to remember for later.
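Here is a rough sketch of the time-slicing idea described above, written as a round-robin scheduler. The task names and work amounts are made up, and a real OS scheduler also saves registers and handles priorities, which this example leaves out.

```python
from collections import deque

def round_robin(tasks, time_slice):
    # tasks: dict of name -> units of work still needed (made-up numbers)
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(time_slice, remaining)
        timeline.append((name, ran))       # the task runs for its slice
        if remaining > ran:                # not finished: suspend and requeue
            queue.append((name, remaining - ran))
    return timeline

print(round_robin({"editor": 3, "player": 5}, time_slice=2))
# [('editor', 2), ('player', 2), ('editor', 1), ('player', 2), ('player', 1)]
```

Neither task ever runs at the same moment as the other; they just take turns quickly enough that the user sees both making progress.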

Special Instruction Sets Improve the CPU's Capabilities

In 1997, Intel introduced its MMX feature with the Pentium MMX and carried it over to the Pentium II later that year. MMX is Intel's trade name for its first Single Instruction, Multiple Data (SIMD) capability: one instruction operates on several packed integer values at once. The MMX instruction set was primarily aimed at the emerging, increasingly complex multimedia features. Multimedia and 3D graphics processing stormed the second half of the 1990s, and Intel wanted its CPUs to have instructions suited to that kind of number crunching.

AMD countered Intel's MMX with its 3DNow! SIMD design, implemented in the K6-2 and later K6-family CPUs. This expanded the SIMD concept with floating-point calculations, extending the range of numbers that can be crunched. Intel wouldn't concede supremacy to AMD and introduced SSE (Streaming SIMD Extensions) in its Pentium III CPUs, further improving on the MMX design. This instruction set brought new 128-bit registers. In order to take full advantage of these special instructions, programmers had to organize their data and code in a very strict format to fit the SIMD registers. Even with all these restrictions, SIMD established the PC as a graphics processing powerhouse that unseated the specialized graphics silicon from companies like Silicon Graphics.
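The "strict format" requirement is easier to see with a small model. The sketch below packs four 32-bit floats into 16 bytes, the width of one 128-bit register, and adds two such "registers" lane by lane. The function names are hypothetical, and Python of course performs the four additions one after another; on real hardware a single instruction handles all lanes together.

```python
import struct

def pack128(values):
    # Four 32-bit floats fill one 128-bit (16-byte) register.
    return struct.pack("<4f", *values)

def simd_add(reg_a, reg_b):
    # Models one packed-add instruction: every lane is added to its partner.
    a = struct.unpack("<4f", reg_a)
    b = struct.unpack("<4f", reg_b)
    return struct.pack("<4f", *(x + y for x, y in zip(a, b)))

a = pack128([1.0, 2.0, 3.0, 4.0])
b = pack128([0.5, 0.5, 0.5, 0.5])
print(len(a) * 8)                             # 128
print(struct.unpack("<4f", simd_add(a, b)))   # (1.5, 2.5, 3.5, 4.5)
```

The data has to arrive as exactly four values of the same type, side by side. That layout constraint is what programmers had to design their code around.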

RISC versus CISC

Reduced Instruction Set Computer (RISC) CPUs were introduced by a number of companies that preferred to keep instruction sets very simple. The belief was that a microprocessor built around a small set of simple instructions could run faster. Intel and AMD chose to stick with the CISC (Complex Instruction Set Computer) architecture. CISC CPUs include a huge number of instructions, and lots of those can do some pretty complicated things. The RISC boys couldn't imagine that Intel and AMD would be able to fabricate their microprocessors using nanometer technologies that allow CPU complexities of more than 100 million transistors and get that intricate circuitry running at gigahertz rates. The scientific community remembers that it had to migrate from RISC CPUs to CISC ones when, for its workstations, the road of reduced instruction sets seemed to come to a dead end. Scientists realized that the CISC CPUs were cheaper but still powerful, and they eventually became supporters of this technology.

A Pipeline Tale

The pipeline is one of those fundamental CPU features that enable several other very important speed-up schemes. Keep in mind that Intel's x86 line didn't get a pipelined architecture until 1989, so the previous CPUs had more "primitive" ways of dealing with processing problems.

CPUs have to get instructions and data out of memory (read cycle) and put data back (write cycle). The CPU first fetches an instruction. That instruction might then require a chunk of data, or several. That means a single instruction might take two or three read cycles to get the instruction and its data into the microprocessor.

This is where the address bus comes in. The microprocessor outputs the address on the address bus, and then reads the instruction. If the instruction calls for data, one or more read cycles take place. But all the while, the microprocessor has to sit idle and wait for the instruction and data to show up. After the microprocessor gets all the pieces of the instruction and data, it goes to work. Some instructions may take a few steps, and now it's the memory's turn to stop and wait for the CPU. A pretty laggy way to process data, I would say.

The introduction of systemic processing partially solved this awkward situation: the memory bus and the CPU work at the same time, so the next instruction is being fetched while the current one executes. In this case, the only time the CPU had to wait was for the first instruction and data, and the only time the memory bus was idle was after sending off the last instruction of the program.
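A quick back-of-the-envelope comparison shows what the overlap buys. The sketch assumes every instruction needs the same number of cycles to fetch and to execute, which real programs do not; the cycle counts are made up.

```python
def sequential_cycles(n, fetch, execute):
    # Fetch, then execute, then fetch the next one: nothing overlaps.
    return n * (fetch + execute)

def overlapped_cycles(n, fetch, execute):
    # The first fetch and the last execute cannot overlap with anything;
    # in between, the slower of the two activities sets the pace.
    return fetch + (n - 1) * max(fetch, execute) + execute

print(sequential_cycles(100, 2, 3))  # 500
print(overlapped_cycles(100, 2, 3))  # 302
```

With these numbers, the CPU waits only during the very first fetch, and the bus is idle only while the last instruction executes, matching the description above.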

As CPUs evolved, their instruction sets grew rather haphazardly. Some instructions were much longer than others, and a long instruction could take multiple memory read cycles to fetch. On top of that, the size and amount of data varied. It was time for our nice systemic process to lose it and run amok. The generous guys at Intel came up with an ingenious idea: prefetching. A small prefetch queue was already present in the 8086, and the 286 carried the idea forward. With prefetching, a little buffer memory sits between the memory bus and the CPU. This buffer memory is also known as a prefetch queue. The memory bus would deliver instructions to the prefetch queue, and if the CPU got bogged down with a complex instruction, the next instructions would just stack up in the queue. When the CPU ran into a string of simple instructions, it would draw down the prefetch queue. Either way, both the memory bus and the CPU could run at full speed without being held up by the other.
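The stacking-up and drawing-down behaviour can be watched in a small simulation. This is a simplified sketch with made-up execution costs: the bus delivers at most one instruction per cycle, and an instruction may start executing in the same cycle it arrives, which real hardware would not necessarily allow.

```python
from collections import deque

def simulate(costs, queue_size):
    # costs: execution cycles per instruction, in program order (made-up values)
    queue = deque()
    next_fetch = 0      # index of the next instruction the bus will deliver
    busy = 0            # cycles left on the instruction being executed
    done = 0
    cycle = 0
    depths = []         # queue depth at the end of every cycle
    while done < len(costs):
        cycle += 1
        # Memory bus: deliver one instruction if the queue has room.
        if next_fetch < len(costs) and len(queue) < queue_size:
            queue.append(costs[next_fetch])
            next_fetch += 1
        # CPU: pick up the next instruction when it is free.
        if busy == 0 and queue:
            busy = queue.popleft()
        if busy > 0:
            busy -= 1
            if busy == 0:
                done += 1
        depths.append(len(queue))
    return cycle, depths

# One complex instruction (4 cycles) followed by three simple ones.
print(simulate([4, 1, 1, 1], queue_size=4))
# (7, [0, 1, 2, 3, 2, 1, 0])
```

The queue fills while the 4-cycle instruction is executing and empties during the run of simple ones. The total of 7 cycles equals the sum of the execution costs, so in this example the CPU never waits for the bus.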

Intel thought that this architecture still couldn't unleash the full power of a CPU and finally introduced the pipelining concept with the 486 CPU. Here's how it works: take the systemic process and break it up into very small steps, so that each step has to do only a very simple task. With more steps in the pipeline, each task becomes extremely simple, and even complex instructions can be broken down and pushed through almost as quickly as simple ones.
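One way to picture the production line is to print which instruction occupies each step on every clock cycle. The four stage names below are illustrative only, not the 486's actual stages, and the sketch assumes an ideal pipeline with no stalls.

```python
STAGES = ["fetch", "decode", "execute", "write"]  # illustrative stage names

def pipeline_table(n_instructions, stages=STAGES):
    # Row = clock cycle, column = stage, cell = instruction number or None.
    total_cycles = len(stages) + n_instructions - 1
    table = []
    for cycle in range(total_cycles):
        row = []
        for s in range(len(stages)):
            instr = cycle - s   # instruction number `instr` entered the first stage at cycle `instr`
            row.append(instr if 0 <= instr < n_instructions else None)
        table.append(row)
    return table

for cycle, row in enumerate(pipeline_table(3)):
    print(cycle, row)
# 0 [0, None, None, None]
# 1 [1, 0, None, None]
# 2 [2, 1, 0, None]
# 3 [None, 2, 1, 0]
# 4 [None, None, 2, 1]
# 5 [None, None, None, 2]
```

Three instructions finish in 6 cycles instead of the 12 they would need if each one had to clear all four stages before the next could start.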

The pipeline also relies on an interesting mechanism that predicts which instructions will come next. Prediction kicks in when the program reaches a branch, such as the jump at the end of a loop. At that point, the address of the instruction to follow depends on the outcome of the instructions currently executing, so the pipeline has to guess in order to keep itself full.

If the pipeline predicts that the program flow will continue in a loop, it can fetch the next instruction in that loop. If the branch prediction turns out to be wrong, the pipeline has to be flushed and part of the production line is held up until the right instruction works its way down the pipeline. However, these predictions are right the majority of the time, so software full of branches usually sees a performance increase.
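The article doesn't say how the prediction is made. One common textbook scheme, used here purely as an assumption, is a 2-bit saturating counter: it predicts "taken" while the counter is 2 or 3 and nudges the counter after every real outcome.

```python
def predict_run(outcomes, counter=2):
    # counter 0-1 predicts "not taken", 2-3 predicts "taken" (assumed scheme)
    correct = 0
    for taken in outcomes:
        prediction = counter >= 2
        if prediction == taken:
            correct += 1
        # Move toward the real outcome, saturating at 0 and 3.
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return correct

# The branch at the bottom of a 10-iteration loop: taken 9 times, then falls through.
loop = [True] * 9 + [False]
print(predict_run(loop), "of", len(loop))  # 9 of 10
```

The only miss is the final iteration, when the loop exits. That single wrong guess is the case where the pipeline has to be flushed.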

Superscalar Monster CPUs, Multithreading and Multicore Architectures

Present-day CPUs can usually house more than 31 instructions in the pipeline because some of them are taking parallel paths. That means more than one instruction per clock cycle is executed, and you have a superscalar CPU. Because several instructions complete in each cycle, the number of instructions executed per second is higher than the clock rate itself. Just combine a speed of 3 or 4 GHz with the capacity of the pipeline and, there you have it, billions of instructions running around. (This should not be confused with actual "parallel processing," which is the combining of two or more CPUs to execute a program.)
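The "billions of instructions" figure is simple arithmetic: clock rate times the number of instructions completed per cycle. The values below are hypothetical round numbers, not measurements of any particular CPU.

```python
def instructions_per_second(clock_hz, instructions_per_cycle):
    return clock_hz * instructions_per_cycle

scalar = instructions_per_second(3_000_000_000, 1)       # one instruction per cycle
superscalar = instructions_per_second(3_000_000_000, 2)  # two per cycle (assumed)
print(scalar)       # 3000000000
print(superscalar)  # 6000000000
```

Real CPUs rarely sustain their peak rate, since stalls and mispredicted branches eat into it, so treat this as an upper bound.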

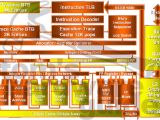

Multithreading is all about improved pipeline action. How do we improve the pipeline capacity? Simple, just add another pipeline. Doing things in parallel speeds them up pretty radically. What's really happening is that the instructions are getting interleaved in the pipelines. While one pipeline is waiting for a memory fetch or something else that holds up execution, the other pipeline can take advantage of the time, and thus CPU latency times diminish enormously. There is a little problem, though. How does the CPU sort out the bits of the program that can be run in parallel? Today's CPUs have become so complex that they can keep track of various threads of the program and put the results back together at a merge point.

Now, imagine you have two or more CPUs working on multiple threads. Is that a super boost for data processing or what? Well, yes, but if you don't have the support of specialized software, you can't really appreciate it. In fact, you don't even have to imagine this. You have this technology in AMD's Athlon X2 CPUs or Intel's Core 2 ones right now. The inclusion of several interconnected CPU cores in one silicon chip is the present trend, and the future will see more and more CPU cores in a single chip. Too many nasty little monsters for you to handle inside a common CPU? How about the map of that intricate maze I presented thus far?
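The interleaving described above can be sketched with a tiny model. It assumes one shared execution unit, threads written as lists of made-up `"run"` and `"wait"` steps, and memory waits that elapse in the background; none of this reflects the internals of a specific CPU.

```python
def run_interleaved(threads):
    # Each thread is a list of steps: "run" needs the execution unit for one
    # cycle, "wait" is a cycle spent stalled on memory.
    pos = [0] * len(threads)
    cycles = 0
    while any(p < len(t) for p, t in zip(pos, threads)):
        cycles += 1
        unit_free = True
        for i, t in enumerate(threads):
            if pos[i] >= len(t):
                continue                  # this thread has finished
            if t[pos[i]] == "wait":
                pos[i] += 1               # the stall elapses in the background
            elif unit_free:
                pos[i] += 1               # this thread gets the execution unit
                unit_free = False
    return cycles

a = ["run", "wait", "wait", "run"]
b = ["run", "run", "wait", "run"]
print(run_interleaved([a]) + run_interleaved([b]))  # 8 cycles back to back
print(run_interleaved([a, b]))                      # 5 cycles interleaved
```

While thread `a` is stalled on memory, thread `b` uses the execution unit, so the two together finish in 5 cycles rather than 8.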

That should be the CPU in a nutshell. I hope it wasn't much of a headache for you to keep track of all this information. If you did find it quite complicated, just upgrade to a multicore CPU, man! Oh, and stay tuned for more articles. The motherboard is ready to be dissected and presented to you in full detail next time.
