A team of researchers from Stanford University has created a tool that deciphers the CAPTCHAs used by Wikipedia, eBay, CNN and other popular websites that rely on them to block automated interactions.

“As a contribution toward improving the systematic evaluation and design of visual captchas, we evaluated various automated methods on real world captchas and synthetic one generated by varying significant features in ranges potentially acceptable to human users,” the paper states.

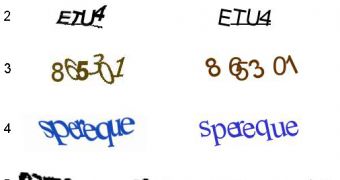

“We evaluated state-of-the-art anti-segmentation techniques, state-of-the-art anti-recognition techniques, and captchas used by the most popular websites.”

The results of their tests show that 13 of the 15 websites they examined are vulnerable to automated solving attempts, highlighting the need to improve the design and principles of the codes.

Their tool, called Decaptcha, is built to defeat anti-segmentation techniques: it cleans and manipulates the images so that an algorithm can isolate and identify each symbol.
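To make the idea of cleaning an image and then splitting it into symbols more concrete, here is a minimal sketch in Python. It is not Decaptcha's actual code: the function names (`binarize`, `remove_isolated_pixels`, `segment_columns`), the threshold of 128 and the simple column-based splitting are all assumptions chosen for illustration, and the input is a plain grayscale grid rather than a real image file.

```python
from typing import List, Tuple

# Hypothetical image type: rows of grayscale pixels, 0 = black, 255 = white.
Image = List[List[int]]


def binarize(image: Image, threshold: int = 128) -> Image:
    """Map each pixel to 1 (ink) if darker than the threshold, else 0."""
    return [[1 if px < threshold else 0 for px in row] for row in image]


def remove_isolated_pixels(binary: Image) -> Image:
    """Crude noise filter: drop ink pixels that have no ink neighbour."""
    h, w = len(binary), len(binary[0])
    cleaned = [row[:] for row in binary]
    for y in range(h):
        for x in range(w):
            if binary[y][x] == 0:
                continue
            neighbours = sum(
                binary[ny][nx]
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
                if (ny, nx) != (y, x)
            )
            if neighbours == 0:
                cleaned[y][x] = 0
    return cleaned


def segment_columns(binary: Image) -> List[Tuple[int, int]]:
    """Return (start, end) column ranges, end exclusive, split at empty columns."""
    width = len(binary[0])
    ink = [any(row[x] for row in binary) for x in range(width)]
    segments: List[Tuple[int, int]] = []
    start = None
    for x, has_ink in enumerate(ink):
        if has_ink and start is None:
            start = x            # a new symbol begins
        elif not has_ink and start is not None:
            segments.append((start, x))  # the symbol ended at the gap
            start = None
    if start is not None:
        segments.append((start, width))
    return segments
```

Splitting at empty columns only works when symbols do not touch or overlap, which is exactly the weakness that anti-segmentation designs aim at: lines drawn across the text or collapsed letters leave no empty column to cut on.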

The paper advises creating CAPTCHAs from black-and-white binarized letters, since this is an efficient way to prevent automated decoding. Using a matrix that encodes the pixel representation of the letters also makes the challenge-response tests tougher to break.

While character distortion is not very effective, things such as a complex background, non-confusable character sets and the use of small lines are.

Of all the sites tested, only Google and reCAPTCHA resisted the attacks, while others such as Blizzard, Baidu, Reddit, Digg and Megaupload did not.

It has become clearer than ever that CAPTCHAs are not unbreakable. These latest experiments show that website owners who want to make sure they're well protected should take a more scientific approach to creating the tests.

Some of the recommendations are not difficult to put in place, so even amateur webmasters can apply them to keep their domains from being filled with spam and other malicious content.
