Microsoft has long been touting the possibilities its Kinect sensor opens up, especially when combined with the Kinect for Windows SDK and a good idea. Two recent projects demonstrate that this is indeed the case.

The two projects, Microsoft's MirageTable and the Augmented Reality Sandbox from UC Davis, take full advantage of the motion-sensing capabilities of Kinect for Windows and combine them with a 3D projector to deliver new experiences.

However, as the two videos embedded below show, the projects take quite different approaches to using Kinect.

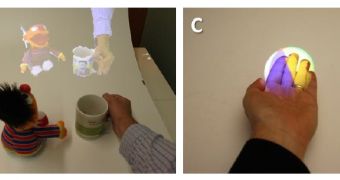

Coming from Microsoft Research, MirageTable was designed to scan objects placed within the Kinect sensor's range and create virtual projections of them.

The system also tracks the position of the user's eyes, so that objects are projected from the correct perspective.

“Our depth camera tracks the user’s eyes and performs a real-time capture of both the shape and the appearance of any object placed in front of the camera (including user’s body and hands),” Microsoft Research notes in a document detailing the project (PDF).

“This real-time capture enables perspective stereoscopic 3D visualizations to a single user that account for deformations caused by physical objects on the table.”

Users can interact with the objects on the table through physically realistic freehand actions, as the first clip at the bottom of the article shows.

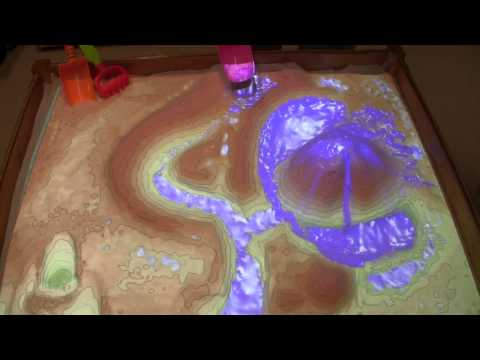

The Augmented Reality Sandbox follows the same basic principles, except that it was designed to project a topographic map and overlay simulated water flow on top of it.

The image is projected onto a box of sand, and any change in the shape of the surface is captured so that the projected image updates accordingly.

Using GPU acceleration, the system determines in real time where real waterways would flow across the topography and renders the corresponding imagery. The second video below gives a better idea of how the project works.
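The article does not describe how the sandbox decides where water goes, and the project itself relies on its own GPU-based water simulation. As a much simpler illustration of the underlying idea, the Python sketch below assumes the Kinect depth frame has already been converted into a grid of surface heights (higher number means higher sand) and applies the classic D8 "steepest descent" rule: each cell sends its water to the neighbour with the steepest downhill slope. The function name and grid layout are hypothetical, chosen only for this example.

```python
# Hypothetical illustration only: a simplified D8 steepest-descent pass over a
# small height map. This is NOT the AR Sandbox's actual GPU water simulation.
from typing import List, Optional, Tuple

HeightMap = List[List[float]]
Direction = Optional[Tuple[int, int]]

# The eight neighbour offsets (row, column) considered by the D8 rule.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1),
              (0, -1),           (0, 1),
              (1, -1),  (1, 0),  (1, 1)]


def flow_directions(heights: HeightMap) -> List[List[Direction]]:
    """For each cell, return the offset to its steepest downhill neighbour,
    or None if no neighbour is lower (water would pool there)."""
    rows, cols = len(heights), len(heights[0])
    result: List[List[Direction]] = []
    for r in range(rows):
        row_dirs: List[Direction] = []
        for c in range(cols):
            best: Direction = None
            best_slope = 0.0
            for dr, dc in NEIGHBOURS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    # Diagonal neighbours are farther away, so divide the
                    # height drop by the distance to get a comparable slope.
                    distance = (dr * dr + dc * dc) ** 0.5
                    slope = (heights[r][c] - heights[nr][nc]) / distance
                    if slope > best_slope:
                        best_slope = slope
                        best = (dr, dc)
            row_dirs.append(best)
        result.append(row_dirs)
    return result


if __name__ == "__main__":
    # A tiny slope that falls towards the bottom-right corner.
    terrain = [[3.0, 2.0, 1.0],
               [2.0, 1.0, 0.5],
               [1.0, 0.5, 0.0]]
    for row in flow_directions(terrain):
        print(row)
```

In a live setup, a pass like this would be rerun whenever the sensor reports that the sand has been reshaped; cells that return `None` mark hollows where water would collect.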
