DreamHost, a web hosting provider that currently hosts a considerable number of websites, is requiring some users to edit their .htaccess file to block Googlebot from indexing their pages. I know, I know, it might sound ridiculous, but this is not the entire story. According to SeoPedia, the main reason for blocking Googlebot is "high memory usage and load on the server". It seems that company representatives emailed several customers, asking them to make their content unsearchable for spiders and crawlers.

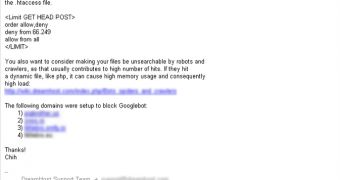

According to Zoso, one of the affected users, the email read: "This email is to inform you that a few of your sites were getting hammered by Google bot. This was causing a heavy load on the webserver, and in turn affecting other customers on your shared server. In order to maintain stability on the webserver, I was forced to block Google bot via the .htaccess file. You also want to consider making your files be unsearchable by robots and crawlers, as that usually contributes to number of hits. If they hit a dynamic file, like php, it can cause high memory usage and consequently high load."

Of course, this is the moment when I start asking questions about this ridiculous decision. Google's search engine is currently the main source of traffic for most of the hosting provider's customers. If the company cuts off their most important source of visitors, it risks losing many of those clients. Not directly, though: some website owners use the money they earn from visitors sent by Google to pay for their hosting, so losing that traffic means losing the means to pay.

Now, if the hosting provider really wants to keep its customers, it should upgrade its systems to make them more powerful, more stable and able to handle Googlebot's crawling. So, it seems that DreamHost is rather a nightmare...
