Google is always tweaking and improving its search algorithm. Recently, it has been working on weeding out poor-quality results, the product of aggressive SEO. Content farms were the first target, but Google now has its sights set on yet another transgressor: scraper sites.

These sites scour the web and pull in content from other places (blogs, news sites and so on) to fill their pages. They then try to game Google into ranking them higher.

There are degrees of legitimacy in the scraping business, ranging from obvious zero-value sites that copy whatever they can all the way up to aggregators.

Arguably, Google News is itself a scraper site, but, of course, this is not the type of site Google is targeting.

Instead, it's looking for perpetrators that copy full blog posts or articles without so much as a link back. Google has already taken steps against this type of site, but it wants to do more. And it wants your help to do it.

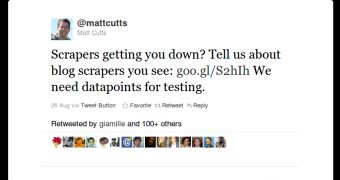

In a tweet, Google search quality czar Matt Cutts asked website owners, and everyone else, to tell Google about any scraper sites of this kind they know of.

"Scrapers getting you down? Tell us about blog scrapers you see: goo.gl/S2hIh We need datapoints for testing," he tweeted.

He linked to a Google Docs form that can be used to report cases where scraper sites rank well, sometimes better than the original source. Anyone filing a report needs to provide the search query where the problem shows up, the URL of the original story and the URL of the scraped copy.

Google wants as much data as it can get, presumably to discover the cases where its algorithm is failing and to test solutions that will, hopefully, fix the problem or at least alleviate it. [via SEL]
