A few days ago, Google started sending out alerts letting some website owners know that their robots.txt file contains a setting that will affect their search engine ranking.

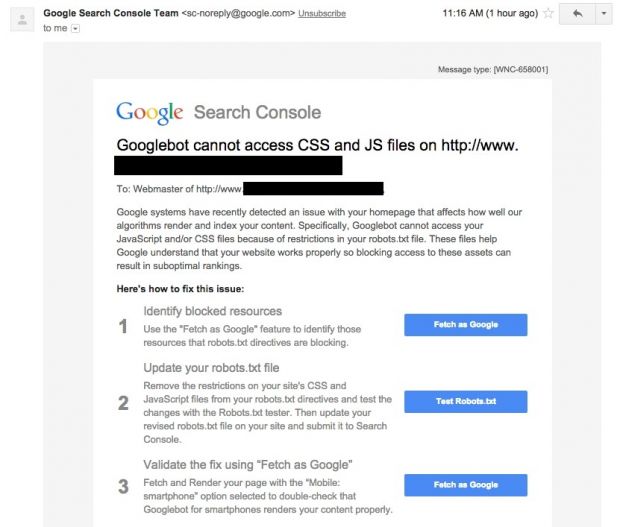

The email (see it below) informs webmasters that their robots.txt file is blocking Google's crawler from accessing the site's CSS and JS resources.

The robots.txt file is not always used, but when deployed to a website's root, it tells Google's search crawler (known as Googlebot) which files it can crawl and index.

Most of the time, robots.txt files look like this:

User-agent: crawler_name

Disallow: /file_or_folder_name

Allow: /file_or_folder_name

The "User-agent" line names the search crawler the rules apply to. The next two declarations, "Disallow" and "Allow", tell that crawler what it can crawl and what it can't.
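To see how these declarations play out, here is a minimal sketch that feeds a small rule set to Python's standard-library `urllib.robotparser` and asks whether a crawler may fetch a few paths. The rules and paths are made up for illustration, and Python's parser is only a stand-in: it is not Google's implementation and may resolve conflicting rules differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules in the same shape as the example above.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit/
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path, agent="Googlebot"):
    """Return True if `agent` may crawl `path` under ROBOTS_TXT."""
    return parser.can_fetch(agent, path)


print(allowed("/index.html"))                  # no rule applies
print(allowed("/private/report.html"))         # caught by the Disallow rule
print(allowed("/private/press-kit/logo.png"))  # caught by the Allow rule
```

Paths that no rule mentions are crawlable, which is why a file with no matching "Disallow" line leaves everything open.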

Because search engines crawl everything by default, most webmasters put a robots.txt file in place to control what's OK to crawl and index and what's not.

The robots.txt file is crucial to webmasters because it lets them keep crawlers out of folders where sensitive information, code or images reside.

As Google has gotten smarter, old robots.txt configurations are hindering content indexing

In some cases, the blocked folders include CSS and JavaScript files, resources that have lately become more important to Google.

In recent years, Google has improved how it deals with interactive, JS-heavy, AJAX websites, and it can now render pages just as users see them. Blocking access to CSS and JS can therefore interfere with how Google normally processes a site.
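A quick way to spot this problem is to check a page's stylesheet and script URLs against the site's rules. The sketch below does that with Python's `urllib.robotparser`; the robots.txt contents, the `blocked_assets` helper, and the example.com URLs are all hypothetical, and the standard-library parser only approximates Googlebot's behavior.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical older-style configuration that hides asset folders.
OLD_ROBOTS_TXT = """\
User-agent: *
Disallow: /css/
Disallow: /js/
Disallow: /admin/
"""


def blocked_assets(robots_txt, resource_urls, agent="Googlebot"):
    """Return the CSS/JS URLs that `agent` may not fetch under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = []
    for url in resource_urls:
        path = urlparse(url).path.lower()
        is_asset = path.endswith((".css", ".js"))
        if is_asset and not parser.can_fetch(agent, url):
            blocked.append(url)
    return blocked


page_resources = [
    "https://example.com/css/site.css",
    "https://example.com/js/app.js",
    "https://example.com/images/logo.png",
]
print(blocked_assets(OLD_ROBOTS_TXT, page_resources))
```

Any URL this reports is one a crawler following these rules could not load when rendering the page.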

Webmasters who have received this email should carefully follow the steps Google presents and free up Googlebot to access CSS and JS files. Otherwise, they should expect a big hit to their search engine rankings.

If your domain has not been added to a Google Webmasters account and Google wasn't able to reach you via your Gmail account, you can test your website using the Fetch as Google and robots.txt Tester resources referenced below, and add the following to your robots.txt file:

Allow: /*.css

Allow: /*.js
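Google's crawler understands two wildcards in rule paths: `*` matches any run of characters, and a trailing `$` marks the end of the URL. As a rough sketch of that matching, assuming those documented semantics, the helper below turns a rule path into a regular expression so a rule can be tried against sample URLs before it is deployed. The names `rule_to_regex` and `matches` are illustrative only, and the sketch ignores how Google picks between conflicting "Allow" and "Disallow" rules.

```python
import re


def rule_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    `*` matches any run of characters and a trailing `$` anchors the end
    of the URL; everything else is matched literally from the path start.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern, path):
    """Return True if the rule path `pattern` applies to `path`."""
    return rule_to_regex(pattern).match(path) is not None


print(matches("/*.css", "/theme/site.css"))         # stylesheet anywhere on the site
print(matches("/*.css", "/theme/site.css?ver=2"))   # query string after the extension
print(matches("/*.css$", "/theme/site.css?ver=2"))  # `$` requires the URL to end in .css
print(matches("/*.js", "/images/logo.png"))         # unrelated file
```

Because an unanchored pattern also matches URLs that carry a version query string, leaving off the `$` is usually the more forgiving choice for asset rules.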
