ICML, the International Conference on Machine Learning, is an annual event focused on machine learning; this year it is taking place in Lille, France. NVIDIA used the event to announce several major features it is currently working on.

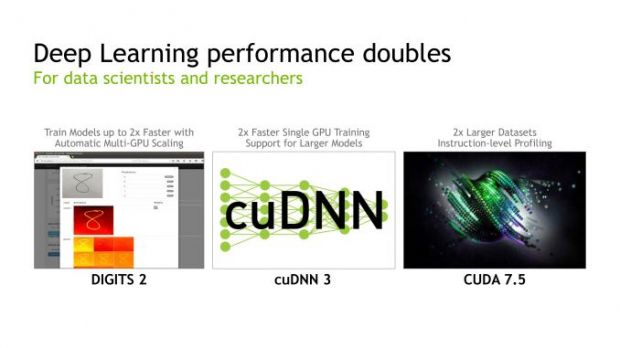

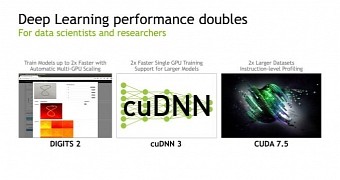

Although most of NVIDIA's revenue comes from selling hardware, the company also invests in creating and improving three major software libraries and environments: CUDA, cuDNN, and DIGITS. Through them, NVIDIA aims to make its GPUs attractive to developers and to other platforms that might integrate them into their systems.

The American company announced that it is laying the groundwork for neural networks and half-precision (FP16) compute tasks, which it believes will be important going forward. NVIDIA regards its Tegra and Maxwell microarchitectures as already being key parts of its advanced neural network development, while the upcoming Pascal architecture is expected to take this design to new heights.

CUDA 7.5

As a half update (hence the .5), CUDA 7.5 is a smaller release that mainly lays the API groundwork for FP16. It brings proper FP16 support to non-Tegra GPUs, though not in the form of a direct compute performance boost. Instead, storing data as FP16 relieves memory pressure: each value takes half the memory and bandwidth of FP32, so larger data sets fit in the same GPU memory, and developers can take advantage of any performance benefits that the reduced bandwidth brings.
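To put the memory argument in numbers: an FP16 value occupies 2 bytes versus 4 bytes for FP32, so the same buffer holds twice as many values. The sketch below uses only Python's standard `struct` module (format codes `'e'` for half precision and `'f'` for single precision) rather than any CUDA API; the tensor size and the 12 GiB budget are hypothetical figures chosen for illustration.

```python
import struct

# Standard-size struct formats ('<' = little-endian, no native padding):
# 'f' is IEEE 754 single precision (FP32, 4 bytes), 'e' is half precision (FP16, 2 bytes).
FP32, FP16 = "<f", "<e"

def buffer_bytes(num_values: int, fmt: str) -> int:
    """Bytes needed to store num_values elements of the given struct format."""
    return num_values * struct.calcsize(fmt)

def capacity(budget_bytes: int, fmt: str) -> int:
    """How many elements of the given format fit in a fixed memory budget."""
    return budget_bytes // struct.calcsize(fmt)

def to_fp16(x: float) -> float:
    """Round-trip a Python float through FP16 storage to expose the precision lost."""
    (y,) = struct.unpack(FP16, struct.pack(FP16, x))
    return y

n = 1_000_000            # hypothetical tensor: one million values
budget = 12 * 1024 ** 3  # hypothetical 12 GiB memory budget

print(buffer_bytes(n, FP32), buffer_bytes(n, FP16))      # 4000000 2000000
print(capacity(budget, FP16) // capacity(budget, FP32))  # 2
print(to_fp16(0.1))                                      # 0.0999755859375
```

The last line shows the trade-off: FP16 keeps only about three significant decimal digits, which is why it is pitched here as a way to save memory and bandwidth rather than as a precision or compute upgrade.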

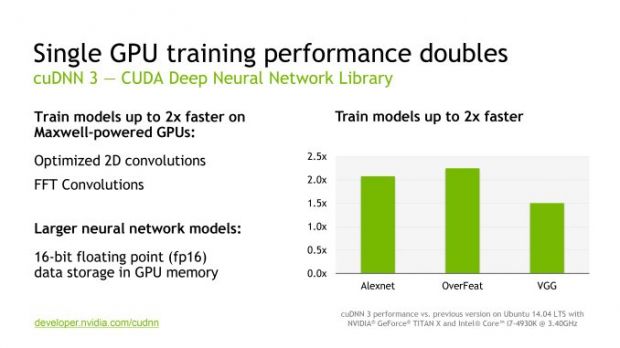

cuDNN 3

cuDNN, short for CUDA Deep Neural Network library, is NVIDIA's collection of accelerated neural network functions. Now at version 3, it goes hand in hand with CUDA 7.5 and likewise supports FP16 data formats on existing NVIDIA GPUs, improving efficiency and freeing up bandwidth for larger data sets.

cuDNN 3 does, however, bring optimized routines for existing Maxwell GPUs that improve overall performance.

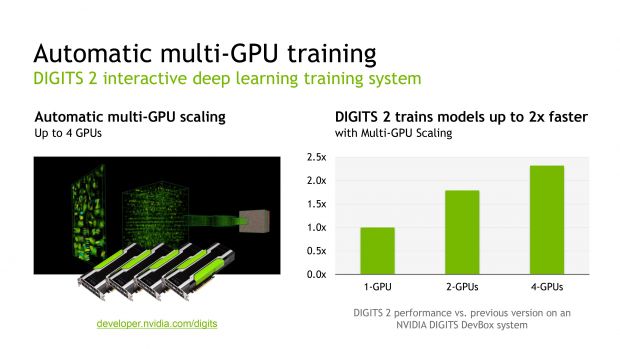

DIGITS 2

Tying these features together is DIGITS 2, the second iteration of the company's Deep Learning GPU Training System middleware. DIGITS is NVIDIA's high-level neural network software for scientists and researchers, offering a complete neural network training system that helps less tech-savvy users get familiar with neural networks.

DIGITS 2 also adds support for training neural networks across multiple GPUs, building on NVIDIA's earlier DIGITS DevBox, which was built to house and manage four Titan X cards. Scaling remains a concern, however: performance gains slow down considerably beyond four GPUs, as bus traffic and synchronization overhead quickly become apparent once the number of GPUs increases.
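The synchronization cost follows from how multi-GPU training is commonly organized. The sketch below is a generic simulation of synchronous data parallelism in plain Python, not DIGITS 2's actual implementation (the announcement does not detail it): each simulated worker computes a gradient on its own slice of a batch, and every worker waits for the averaged gradient before the next step. The toy linear model and the names `local_gradient` and `data_parallel_step` are hypothetical, chosen for illustration.

```python
from typing import List

# Illustrative sketch (assumption): generic synchronous data parallelism,
# not DIGITS's code. "GPUs" are simulated by slicing the batch in a loop.

def local_gradient(w: float, xs: List[float], ys: List[float]) -> float:
    """Mean-squared-error gradient for the toy model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def data_parallel_step(w: float, xs: List[float], ys: List[float],
                       num_gpus: int, lr: float) -> float:
    """One synchronous SGD step split across num_gpus simulated workers."""
    if len(xs) % num_gpus != 0:
        raise ValueError("batch size must be divisible by num_gpus")
    shard = len(xs) // num_gpus
    # Each simulated GPU computes a gradient on its own slice of the batch.
    grads = [local_gradient(w, xs[g * shard:(g + 1) * shard],
                            ys[g * shard:(g + 1) * shard])
             for g in range(num_gpus)]
    # Synchronization point: all workers must finish before the gradients are
    # averaged; on real hardware this is a communication step over the bus.
    avg_grad = sum(grads) / num_gpus
    return w - lr * avg_grad

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, xs, ys, num_gpus=2, lr=0.01)
print(round(w, 3))
```

With equal shards, the averaged gradient matches the single-GPU gradient, so the math is unchanged; what changes is that every step now ends in a round of communication and a barrier, and that overhead does not shrink as more GPUs share the compute.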

On the other side of the fence, AMD's Project Quantum sets finesse aside and uses a "monstrous" dual-GPU graphics card carrying two Fury X GPUs on one PCB, with remarkable performance. NVIDIA's DevBox may hold four GPUs in SLI, but filling the same number of PCIe slots with dual-GPU Fury X cards would double that GPU count. And yes, with eight GPUs in one system, things could get out of hand.

Unfortunately, these are only fantasies for now. NVIDIA plans to better integrate its high-performance machines, and in doing so it hopes to attract more client platforms to its systems.
