Two French researchers have discovered a way to use the Siri and Google Now voice assistant software to relay malicious commands to smartphones without the user's consent or knowledge.

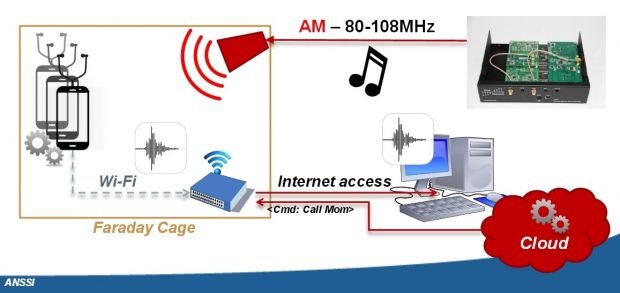

José Lopes Esteves and Chaouki Kasmi, two security researchers for ANSSI (Agence Nationale de la Sécurité des Systèmes d'Information), a French national agency dedicated to IT security, have created a special rig that consists of an antenna, a USRP radio, an amplifier, and a laptop running the GNU Radio software.

Taking over phones with Siri and Google Now

The rig can transmit radio waves at an iPhone or Android phone that has headphones plugged in. The headphone cable acts as a receiver: it picks up the radio signals and passes them to the operating system's voice recognition software.

Using this simple method, attackers could silently force the phone to carry out malicious actions, provided the user is not looking at the screen while this is happening.

Anything Siri and Google Now, the voice assistants on iOS and Android, are permitted to do, the attacker can do as well. This includes sending texts, making phone calls, opening Web pages, installing apps, and so on.

Limitations exist regarding rig size, range, and OS setup

As you'd imagine, the attack has some limitations. First, it only works when headphones are plugged into the device, and those headphones must have an integrated microphone rather than being simple music-listening earbuds.

Second, the rig is quite bulky and has a short attack range. With a small version of the rig, an attacker can't reach phones more than 2 meters (6.5 feet) away. The full rig extends the range to 5 meters (16 feet), but it needs a car to hide it and move it around. The video below shows how big the antenna is.

That's hardly an ideal setup for taking over phones with radio waves, and things get even trickier.

The attack won't work against versions of Google Now and Siri (such as Siri on the iPhone 6s) that are set up to recognize the owner's voice. Additionally, on locked phones, the voice assistant must be enabled on the lockscreen for the attack to succeed.

On older iPhones, where Siri is activated by long-pressing a button on the headphones, attackers also need to simulate the electrical signal of that button press alongside the radio waves, which makes the attack even more complex. Either way, the security risk still exists.

What needs to be done?

To counter this attack vector, the researchers advise users not to leave headphones plugged into their devices when they are not in use. Users should also choose microphone-less headphones and enable voice assistant software only when needed.

For manufacturers, the researchers recommend better shielding for headphone cords, requiring voice recognition for all voice assistant operations, and adding a sensor to detect abnormal electromagnetic activity. They also encourage users to trigger the voice assistant with custom commands instead of the default "Hey Siri" and "OK Google."

The image below shows a basic proof of concept of their rig. More details can be found in the Hack in Paris conference slides and in the presentation itself (YouTube video below). Their research paper is also available on the IEEE website.