Facebook has decided to actively help people who are having suicidal thoughts and has begun using artificial intelligence to identify members who may be at risk of killing themselves.

This intention was announced last month by Mark Zuckerberg himself, so it was clear it was only a matter of time before Facebook actively began tackling this important matter.

It seems that Facebook has developed algorithms to help it spot warning signs in users' posts and in the comments left by their friends. The company's human review team will look over all flagged messages and then contact those it believes are at risk of self-harm to suggest ways they can get help.

"There is one death by suicide in the world every 40 seconds, and suicide is the second leading cause of death for 15-29 year olds. Experts say that one of the best ways to prevent suicide is for those in distress to hear from people who care about them," reads Facebook's post.

Better help for those in need

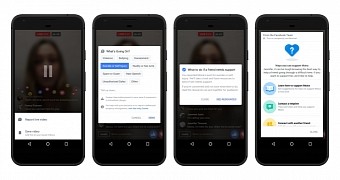

The resources offered to people who may be thinking of suicide are growing today: integrated prevention tools to help people in real time on Facebook Live, live chat support from crisis support organizations through Messenger, and streamlined reporting for suicide, assisted by artificial intelligence.

If you see a post that makes you concerned about someone's well-being, you can report the post to Facebook or reach out to the person directly. Facebook has reviewers going through reports 24/7 to see which ones express suicidal thoughts.

Of course, suicide prevention tools have been built into the social network for as long as Facebook has been around, with the company working alongside various mental health organizations. Last year, the latest tools became available globally. Now, thanks to AI, it is getting easier to spot those worrying reports.
