Mathematicians usually model uncertainty with the help of randomness. People, however, understand uncertainty intuitively in a different way, and this difference comes to the surface in various simple experiments. Two of the best-known such experiments are the Ellsberg and the Allais experiments.

In these experiments, people make apparently irrational, contradictory choices. But this verdict of irrationality is a consequence of the mathematical understanding of uncertainty as randomness. One can understand why people make their particular choices in these experiments once one understands that people do not intuitively equate uncertainty with randomness but with lack of control. We understand randomness as a universal form of lack of control (a lack of control that affects everybody), while mere uncertainty, a personal lack of control, still leaves open the possibility that others have control.

The Ellsberg and Allais experiments involve an experimenter who gives away some sum of money to the participants. The sum depends on the participant's choices and on "chance". Thus, such experiments offer an insight into how we deal with uncertainty and with "chance". The interesting result is that randomness doesn't work very well in modeling people's attitude towards uncertainty: by using randomness, one gets paradoxical results.

People's choices in these experiments (and their rationale) can be understood by realizing that the participants assume, consciously or not, that the experimenter does not want to give them the money and that he might have some undisclosed control over what is unknown to them. In these experiments something is unknown to you, but you may worry that it isn't out of the experimenter's reach. This is what biases people's choices in ways that might appear "irrational". Moreover, people have a qualitative understanding of percentages, such as probabilities; they think in terms of 'small', 'medium', 'large', and are not very good at distinguishing between, say, 10% and 1%. I might tell you, "this option has an 89% chance, that option has a 90% chance", but that doesn't mean that you can really weigh the difference between them.

The Ellsberg experiment

Round 1: An urn contains 300 colored marbles. 100 of the marbles are red; the remaining 200 are some mixture of blue and green. A marble will be selected at random from the urn. You will receive $1,000 if the marble selected is of a specified color. Would you rather that color be red or blue?

Round 2: An urn contains 300 colored marbles. 100 of the marbles are red; the remaining 200 are some mixture of blue and green. A marble will be selected at random from the urn. You will receive $1,000 if the marble selected is not of a specified color. Would you rather that color be blue or red?

The typical response pattern in studies of this type is to choose 'red' in both cases. This seems irrational because answering 'red' in the first round seems to imply that the participant considers the outcome 'red' more likely than 'blue' - i.e. as having a larger subjective probability - while answering 'red' in the second round (that is, betting on 'not red' rather than 'not blue') seems to imply that the participant considers 'blue' to be more likely than 'red'.
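
The contradiction can be checked by brute force. The following is a minimal sketch, assuming the participant's belief corresponds to one fixed number of blue marbles used in both rounds; the function name `consistent_blue_counts` is my own, introduced only for illustration.

```python
from fractions import Fraction

TOTAL = 300  # marbles in the urn
RED = 100    # known number of red marbles


def consistent_blue_counts():
    """Blue counts under which 'red' is strictly the better answer in BOTH rounds.

    Assumption: the participant holds one fixed belief about the number of
    blue marbles (0..200) and uses it in both rounds.
    """
    consistent = []
    for blue in range(0, 201):  # blue + green = 200
        p_red = Fraction(RED, TOTAL)
        p_blue = Fraction(blue, TOTAL)
        # Round 1: you win if the marble IS of the chosen color.
        red_better_round1 = p_red > p_blue
        # Round 2: you win if the marble is NOT of the chosen color.
        red_better_round2 = (1 - p_red) > (1 - p_blue)
        if red_better_round1 and red_better_round2:
            consistent.append(blue)
    return consistent


print(consistent_blue_counts())
```

The list comes back empty: round 1 requires fewer than 100 blue marbles and round 2 requires more than 100, so no single belief about the urn makes 'red' the better answer twice.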

The solution to this paradox is to understand that the participant does not assume the outcome is random. In most cases, the participant probably does this unconsciously and, as I said, such paradoxes give us a clue about how humans instinctively deal with uncertainty. We do not treat uncertainty as randomness but simply as a personal lack of control. Thus, uncertainty leaves open the possibility that others might have control where we don't.

In this case, if one assumes, consciously or not, that the experimenter does not want to give away the $1,000 (for instance, one might think that it's not in the experimenter's interest to lose the money), then the typical response pattern becomes rational. The participant assumes the experimenter might be able to meddle with the unknown blue/green ratio. Thus, given this assumed possibility, it is irrational to choose 'blue' (or 'green' if that option existed as well) because the experimenter might simply modify the blue/green ratio to your disadvantage. You don't know the blue/green ratio, but others might. Therefore, given the assumption, the choice of 'blue' remains irrational (or unsafe) in both rounds.
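
This argument can be sketched as a worst-case calculation. The code below is only an illustration under a strong assumption that is mine, not part of the experiment: the experimenter can set the number of blue marbles anywhere from 0 to 200 after seeing the participant's answer. The names `win_probability`, `worst_case` and `safest_choice` are hypothetical.

```python
from fractions import Fraction

TOTAL, RED, UNKNOWN = 300, 100, 200  # UNKNOWN = blue + green marbles


def win_probability(round_no, choice, blue):
    """Chance of winning for a given answer and a given number of blue marbles."""
    count = RED if choice == "red" else blue
    p = Fraction(count, TOTAL)
    # Round 1 pays if the marble IS the chosen color, round 2 if it is NOT.
    return p if round_no == 1 else 1 - p


def worst_case(round_no, choice):
    """Winning chance if a hostile experimenter picks the blue count (assumption)."""
    return min(win_probability(round_no, choice, blue)
               for blue in range(UNKNOWN + 1))


def safest_choice(round_no):
    """Answer with the best worst-case winning chance."""
    return max(("red", "blue"), key=lambda c: worst_case(round_no, c))


for round_no in (1, 2):
    print(round_no, safest_choice(round_no),
          worst_case(round_no, "red"), worst_case(round_no, "blue"))
```

Under this assumption 'red' guarantees 1/3 in round 1 and 2/3 in round 2, while 'blue' can be pushed down to 0 and 1/3 respectively, so 'red' is the safe answer both times.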

One conceivable way of changing the typical response pattern toward a more probabilistic one would be to state explicitly that the experimenter has no power over the blue/green ratio. Another way would be to use an experimenter with whom the participant is familiar. The very fact that the experimenter is an unknown person (or a web site created by an unknown person) is relevant in this case. The typical response pattern might change considerably if the experimenter were someone who stood to gain if the participant won, for example a member of the participant's family.

The Allais experiment

Round 1: You are asked to choose between two gambles. Gamble A gives you $1,000,000 for sure. Gamble B gives you a 10% chance of winning $1,500,000; an 89% chance of winning $1,000,000; and a 1% chance of winning nothing.

Round 2: You are asked to choose between two gambles. Gamble A gives you an 11% chance of winning $1,000,000 and an 89% chance of winning nothing. Gamble B gives you a 10% chance of winning $1,500,000 and a 90% chance of winning nothing.

The typical response pattern in studies of this type is to take the sure thing (A) in the first round, and the second gamble (B) in the second. This is seen as a paradox because it is at odds with the idea that one maximizes expected utility, where the expected utility of a gamble with two mutually exclusive outcomes (A and B) is:

Expected utility = p(A)·u(A) + p(B)·u(B)

where p(x) is the probability of outcome x, and u(x) is the utility of the outcome x. In this case the utility is the amount of money.
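
As a worked example, here is a small sketch that applies this formula to the four gambles, taking the utility to be the amount of money as stated above. It extends the two-outcome formula to any number of mutually exclusive outcomes, since gamble B in round 1 has three; the names `expected_utility`, `ROUND_1` and `ROUND_2` are mine.

```python
from fractions import Fraction


def expected_utility(gamble, utility=lambda amount: amount):
    """Sum of p(x) * u(x) over the mutually exclusive outcomes of a gamble.

    `gamble` is a list of (probability, amount) pairs; by default the
    utility of an outcome is simply the amount of money won.
    """
    return sum(p * utility(amount) for p, amount in gamble)


ROUND_1 = {
    "A": [(Fraction(1), 1_000_000)],
    "B": [(Fraction(10, 100), 1_500_000),
          (Fraction(89, 100), 1_000_000),
          (Fraction(1, 100), 0)],
}
ROUND_2 = {
    "A": [(Fraction(11, 100), 1_000_000), (Fraction(89, 100), 0)],
    "B": [(Fraction(10, 100), 1_500_000), (Fraction(90, 100), 0)],
}

for name, gambles in (("Round 1", ROUND_1), ("Round 2", ROUND_2)):
    for label, gamble in gambles.items():
        print(name, label, expected_utility(gamble))
```

With utility equal to money, gamble B comes out ahead in both rounds (1,040,000 against 1,000,000 in round 1, and 150,000 against 110,000 in round 2), so an expected-utility maximizer of this kind would not switch from A to B between rounds.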

In order to explain this paradox, one usually either rejects the idea that people maximize the expected utility or rejects the formula for the expected utility. I think neither is necessary.

For one thing, the intuitive understanding of the expression "10% chance of winning" is not in terms of randomness but in terms of lack of control, as I argued above. The fact that one has a 10% chance of winning is intuitively understood as a limitation on the experimenter's powers to bias the experiment. The participant assumes (again, usually unconsciously) that the experimenter does not want to give him the money, but the experimenter is assumed to be bound by certain constraints and, as a result of those constraints, he cannot have it his way all the time: in 10% of cases he loses.

The point here is that, because people understand uncertainty as lack of control, people don't understand probability, the degree of lack of control, in a linear fashion. The precision of one's ability to control something is limited. In most practical cases, 10% is equivalent to 1%: it is simply 'small'. Thus, the intuitive understanding of the difference between 10% and 1% is not exactly trustworthy, to say the least.

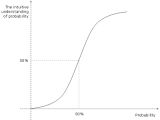

Edwin Jaynes has remarked that we are better at intuitively discerning between probabilities such as 45% and 55% than between probabilities such as 79% and 89% or 11% and 21%, although the absolute difference between them is the same (10 percentage points). The intuitive understanding of probabilities, as a function of the probabilities themselves, is a sigmoid with the linear portion around 50% (see figure).
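
To make the shape concrete, here is a minimal sketch that uses a logistic curve centered on 50% as a stand-in for such a sigmoid. The specific curve and the `steepness` value are my assumptions for illustration, not something taken from Jaynes or measured; note that this curve does not map 0% and 100% exactly onto themselves.

```python
import math


def perceived(p, steepness=10.0):
    """Hypothetical 'intuitive' reading of a probability p in [0, 1].

    A logistic curve centered on 0.5: roughly linear near 50% and
    flattening out toward both ends. `steepness` is an arbitrary choice.
    """
    return 1.0 / (1.0 + math.exp(-steepness * (p - 0.5)))


def perceived_gap(p_low, p_high, steepness=10.0):
    """How far apart two probabilities feel under the curve above."""
    return perceived(p_high, steepness) - perceived(p_low, steepness)


for low, high in ((0.45, 0.55), (0.79, 0.89), (0.11, 0.21)):
    print(f"{low:.0%} vs {high:.0%}: perceived gap {perceived_gap(low, high):.3f}")
```

On this curve the gap between 45% and 55% feels several times larger than the gap between 79% and 89% or between 11% and 21%, even though all three pairs are 10 percentage points apart.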

Thus, in round 1, gamble B, one is presented with the small possibility of winning $1,500,000, the small possibility of winning nothing and a fairly large possibility of winning $1,000,000. However, "gamble" A, round 1, offers the certainty of winning $1,000,000. In gamble B the chance of winning $1,500,000 seems so small that the risk of winning nothing, although also small, seems a pointless risk. On the other hand, in round 2, the gambles A and B seem virtually identical: only the winnings are different. Thus, it shouldn't be so surprising that most people choose A in round 1 and B in round 2.
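
The qualitative reading of the four gambles can be sketched as follows. The cut-off points between 'small', 'medium' and 'large' (20% and 80%) are arbitrary assumptions of mine, and the helper names `bucket` and `describe` are hypothetical.

```python
def bucket(p):
    """Map a probability to a rough qualitative label (thresholds are assumptions)."""
    if p >= 1:
        return "certain"
    if p <= 0:
        return "impossible"
    if p <= 0.2:
        return "small"
    if p < 0.8:
        return "medium"
    return "large"


def describe(gamble):
    """Replace each probability in a list of (probability, prize) pairs by its label."""
    return [(bucket(p), prize) for p, prize in gamble]


ROUND_1_A = [(1.0, 1_000_000)]
ROUND_1_B = [(0.10, 1_500_000), (0.89, 1_000_000), (0.01, 0)]
ROUND_2_A = [(0.11, 1_000_000), (0.89, 0)]
ROUND_2_B = [(0.10, 1_500_000), (0.90, 0)]

for name, gamble in (("1A", ROUND_1_A), ("1B", ROUND_1_B),
                     ("2A", ROUND_2_A), ("2B", ROUND_2_B)):
    print(name, describe(gamble))
```

Read this way, the two round 2 gambles have the same shape, a small chance of winning and a large chance of nothing, and differ only in the prize, while in round 1 a certainty is set against a gamble that includes a small chance of nothing.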

In other words, the mathematical calculation that leads to the Allais paradox is not very revealing. It is absurd to compute something with 10 decimals when the measuring devices at hand are capable of a precision of only up to, say, the 5th decimal. When framing the experiment, the experimenter throws numbers at the participants, but they still understand the problem in qualitative terms such as 'small', 'medium' and so on. The participant is a rather rough "measuring device" of probabilities. So this paradox is a mere mathematical creation, not something that says anything important about human rationality or lack of rationality. It says nothing about the human decision making process, although it does say something about the precision of our understanding of percentages.

Conclusion

The bottom line is that people have a complex understanding of uncertainty. They don't simply use the concept of randomness as a mathematician would have it. In the wild or in society, i.e. in the environment where human rationality was devised by natural selection and where it is actually put to use, uncertainty can cover many issues.

For example, something may be uncertain because one does not know the precise intentions somebody else might have, or one does not know what means or methods somebody else is willing to employ in pursuing his goals. Such examples are much more complex than gambling problems, and it is wrong to consider the theory of gambling problems as the general conceptual framework capable of handling all issues of human decision making. Rather, the situation is the other way around: gambling problems are mere particular cases that can be handled by the human decision making system, a system that usually deals with much more complicated issues.
