Voice-activation technology, implemented on an increasing number of devices, does not authenticate the user in any way, leaving the door open for abuse.

On smart devices, translating sound into text commands eliminates the need for keyboard or mouse input. This lets the user simply speak commands such as sending an email, calling a contact or creating documents.

The technology is currently used on a growing number of gadgets, from smartphones and tablets to television sets and other connected devices commonly found in a household.

Unauthorized voice commands could be exploited on multiple devices

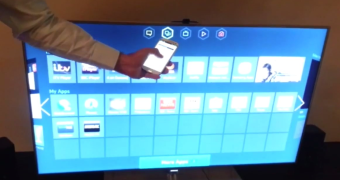

Yuval Ben-Itzhak, chief technology officer at antivirus company AVG, demonstrated in a video how an individual could perform over-the-air attacks on a device whose voice-activation technology does not authenticate the user.

He showed that smart devices could be tricked into running commands derived from voice instructions played by an app on a smartphone. He said that the smart devices in his home responded to any voice command.

“The convenience of being able to control the temperature of your home, unlock the front door and make purchases online all via voice command is an exciting and very real prospect,” Ben-Itzhak writes in a blog post.

However, security is required to make this technology viable in real life. As one of the immediate dangers, he points to the possibility of children gaining access to inappropriate TV channels.

Security should not be sacrificed for convenience

A deeper concern expressed by the security researcher is “being able to issue commands to connected home security systems, smart home assistance, vehicles and connected work spaces.”

In a separate video, the researcher showed that more popular devices with voice technology are also vulnerable, since unauthenticated audio can deliver commands to them.

The Google Now feature on Android was used to dictate an email's recipient address, subject and body, and then to send it, all through a voice synthesizer.

“There is no question that voice activation technology is exciting, but it also needs to be secure. That means, making sure that the commands are provided from a trusted source. Otherwise, even playing a voice from a speaker or an outside source can lead to unauthorized actions by a device that is simply designed to help,” Ben-Itzhak writes.

No malware samples leveraging this attack vector have been found so far, and the researcher is not aware of this type of attack occurring in the wild. Even so, manufacturers should make verifying that voice input comes from a trusted source a priority.

AVG shows unauthenticated voice input triggering a command on a smart TV:

Demonstration of Google Now used to send fake email via voice commands:
