A group of investigators from the University of Washington in Seattle (UWS) has announced the creation of new virtual maps showing the ancient city of Rome. The simulations were created in about 21 hours, which is a very short time for computer modeling on this scale.

The team used the equivalent of 500 computer processors to piece together some 150,000 images, and to extrapolate even more data on what the old city looked like. Some of its most remarkable landmarks were again brought to life, centuries after the Empire fell.

Steve Seitz, who holds an appointment as a professor in the UWS Department of Computer Science and Engineering, was the leader of the effort. The expert is also based at the Graphics and Imaging Laboratory.

“The idea behind 'Rome in a Day' is that we wanted to see how big of a city or model we could build from photos on the Internet,” he says. The work is being conducted with funding secured from the US National Science Foundation (NSF).

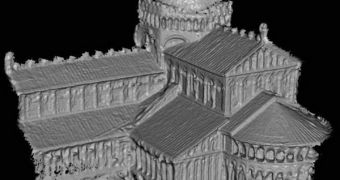

“This is the largest 3-D reconstruction that anyone has ever tried. It's completely organic; it works just from any image set,” the researcher goes on to say, adding that the computer model works by producing 3-D objects from 2-D images of those objects.

The lab team that produced the new models took to Flickr to find photos of the real landmarks, photographed in Rome. They then copied those images and applied their program to them.

The software automatically begins to extrapolate the necessary features from each of the images, and produces a 3D representation of that particular object. The algorithm used for the software was developed by former postdoctoral student Sameer Agarwal.

“If I am a sculpture and there were three photographs of me, we would try to find three points in each photograph that point to my nose,” the inventor says.

“From that we know that there are three points in these images that correspond to a single point in the 3-D world. We would be able to say where in a particular image corresponding to that camera, the image of my nose should show up,” he goes on to say.

“This statement can be written as an equation involving the position and orientation of the camera, the position of my nose and where in the image my nose shows up,” he adds.
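
One common way to write such an equation is the standard pinhole camera model. The article does not say which camera model the team used, so the form below is an assumption, and all of the symbols are introduced here for illustration only. For camera $i$ and 3-D point $j$ (the nose):

$$
\lambda_{ij} \begin{pmatrix} u_{ij} \\ v_{ij} \\ 1 \end{pmatrix} = K_i \left( R_i X_j + t_i \right)
$$

Here $R_i$ and $t_i$ stand for the orientation and position of the camera, $K_i$ holds its internal parameters such as focal length, $X_j$ is the position of the nose in the 3-D world, $(u_{ij}, v_{ij})$ is where the nose shows up in the image, and $\lambda_{ij}$ is the depth of the point as seen from that camera.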

“And you can connect all of these equations together and solve them to, in one shot, obtain both the positions of the cameras as well as the position of my nose in the 3-D world relative to those cameras,” Agarwal says of how the algorithm functions.
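
The idea of stacking one equation per photograph and solving them together can be illustrated with a small sketch. This is not the team's software: it covers only half of the problem Agarwal describes, recovering the 3-D point while assuming the three cameras are already known (a step usually called triangulation). Solving for the cameras at the same time, often called bundle adjustment, follows the same principle with many more unknowns. The function names, the camera matrices and the nose coordinates are all hypothetical.

```python
# Toy triangulation: recover one 3-D point ("the nose") from three photos.
# Assumption: each camera is a known 3x4 pinhole projection matrix.

def project(P, X):
    """Project 3-D point X into the image of camera P (pinhole model)."""
    Xh = list(X) + [1.0]  # homogeneous coordinates
    x, y, w = (sum(p * c for p, c in zip(row, Xh)) for row in P)
    return (x / w, y / w)

def solve3(M, b):
    """Solve a 3x3 linear system by Gaussian elimination with pivoting."""
    A = [row[:] + [bi] for row, bi in zip(M, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, 3):
            f = A[r][col] / A[col][col]
            for c in range(col, 4):
                A[r][c] -= f * A[col][c]
    x = [0.0, 0.0, 0.0]
    for i in reversed(range(3)):
        s = A[i][3] - sum(A[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / A[i][i]
    return x

def triangulate(cameras, observations):
    """Stack two linear equations per photo and solve them by least squares."""
    rows, rhs = [], []
    for P, (u, v) in zip(cameras, observations):
        for coord, prow in ((u, P[0]), (v, P[1])):
            # coord * (P[2] . Xh) - (prow . Xh) = 0, rearranged as A x = b
            r = [coord * P[2][k] - prow[k] for k in range(4)]
            rows.append(r[:3])
            rhs.append(-r[3])
    # Normal equations: (A^T A) x = A^T b
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve3(AtA, Atb)

def camera_at(cx):
    """Hypothetical camera looking down the z axis from position (cx, 0, 0)."""
    return [[1.0, 0.0, 0.0, -cx],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0]]

cameras = [camera_at(-1.0), camera_at(0.0), camera_at(1.0)]
nose = [0.5, 0.2, 4.0]                            # made-up ground truth
photos = [project(P, nose) for P in cameras]      # where the nose shows up
estimate = triangulate(cameras, photos)
print(estimate)  # close to the made-up nose position
```

With noise-free inputs like these, the estimate matches the chosen point up to rounding error. Real photographs give noisy matches, which is why systems of this kind refine the answer by minimizing the gap between predicted and observed image positions.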

After the basic models – such as the one in the attached image – are complete, colors and textures are added to each individual building, and the splendor of old Rome is restored immediately.

“What excites me is the ability to capture the real world; to be able to reconstruct the experience of being somewhere without actually being there,” Seitz explains.
