Earlier today, Google unveiled one of its most ambitious and interesting Chrome experiments to date: an interactive film by Vincent Morisset set to Arcade Fire's latest single, Reflektor.

The video is like nothing you've seen on the web before, yet it's built entirely on fairly standard web technologies.

At the core of the experience are two technologies: WebGL, which handles GPU rendering and 3D graphics, and WebRTC's getUserMedia, which captures input from the webcam. The experiment also relies on the Web Audio API for fine-grained audio processing and on WebSockets for real-time communication.
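To make that list concrete, here is a minimal sketch of how a page might check for these four capabilities before starting such an experience. It is not taken from the project's source: `BrowserLike`, `detectFeatures` and `missingFeatures` are hypothetical names, and the check assumes the modern `navigator.mediaDevices.getUserMedia` entry point rather than the vendor-prefixed forms browsers shipped at the time.

```typescript
// Hypothetical shape of the browser globals we care about (an assumption for
// illustration; in a real page this would simply be `window`).
interface BrowserLike {
  WebGLRenderingContext?: unknown;
  AudioContext?: unknown;
  webkitAudioContext?: unknown;
  WebSocket?: unknown;
  navigator?: { mediaDevices?: { getUserMedia?: unknown } };
}

interface FeatureReport {
  webgl: boolean;
  webcam: boolean;
  webAudio: boolean;
  webSockets: boolean;
}

// Report which of the four technologies the environment exposes.
// Note: this only checks that the APIs exist, not that a GPU, a camera
// or a network connection is actually usable.
function detectFeatures(env: BrowserLike): FeatureReport {
  return {
    webgl: env.WebGLRenderingContext !== undefined,
    webcam: typeof env.navigator?.mediaDevices?.getUserMedia === "function",
    webAudio:
      env.AudioContext !== undefined || env.webkitAudioContext !== undefined,
    webSockets: env.WebSocket !== undefined,
  };
}

// List the names of the features that are not available.
function missingFeatures(report: FeatureReport): string[] {
  return (Object.keys(report) as (keyof FeatureReport)[]).filter(
    (name) => !report[name]
  );
}
```

In a browser, one would pass `window` to `detectFeatures` and show a fallback message if `missingFeatures` returns anything. Taking the environment as a parameter, rather than reading globals directly, keeps the check easy to try outside a browser.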

On top of those, Google used Three.js, a JavaScript library for WebGL-based 2D and 3D graphics. It powers all the effects and 3D objects you can create and interact with in the video. Three.js is a favorite of Google's, and it's quickly becoming the go-to library for hardware-accelerated graphics on the web.

The Just a Reflektor experiment also relies on Tailbone, a Google-built but open source project that makes it possible to link JavaScript applications with Google's App Engine.

A list of the technologies used is fine, but Google is going one better and releasing all the source code behind the project, to help developers build more daring apps.

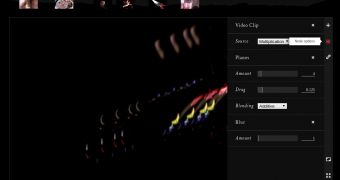

Not only that, but there's also a tool that enables you to use and customize any of the effects from the video. This makes it possible for non-developers to go behind the scenes and play with the components, something that's rarely taken into account in open source projects.

Just giving away the code doesn't make your software customizable or user friendly, even if, in theory, anyone could take the code and make any modifications they want to it. A project is much more open if it enables people to customize or play with the inner workings without having to learn JavaScript or C or anything else to do it.
