Welcome to the last part of this brief history of computing. This article concentrates on what the coming years may hold for computing beyond conventional electronic circuits. Technologies based on quantum physics, chemical reactions, DNA structures, or fiber optics and light waves promise to revolutionize computers, and this article takes a brief look at each of them.

We know by now that the PC is a silicon-based digital device that processes information using electrical signals interpreted as binary values (0s and 1s). A working computer is built from many tiny switching devices called transistors. Presumably, at least a million transistors are required to provide enough processing speed for even basic computational tasks.

But what do computers have in common with quantum mechanics? The quantum computer still uses some electrical signals to process data, but it combines them with quantum mechanical phenomena such as superposition and entanglement. In a classical (or conventional) computer, the amount of data is measured in bits; in a quantum computer, it is measured in qubits. The basic principle of quantum computation is that the quantum properties of particles can be used to represent and structure data, and that quantum mechanisms can be devised and built to perform operations on these data. A digital device computes by manipulating bits, that is, by transporting them from memory to logic gates (Boolean gates) and back.

A quantum computer instead maintains a vector of qubits, which are not restricted to two values. This property is a direct consequence of the qubit obeying the laws of quantum mechanics, which differ radically from the laws of classical physics. A qubit can exist not only in a state corresponding to the logical 0 or 1 of a classical bit, but also in a blend, or superposition, of these classical states. In other words, a qubit can exist as a zero, a one, or simultaneously as both 0 and 1, with a numerical coefficient (an amplitude) for each state that determines the probability of observing it. This may seem counterintuitive because everyday phenomena are governed by classical physics, not by quantum mechanics, which takes over at the atomic level.
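To make the idea of a qubit's coefficients more concrete, here is a minimal Python sketch. It assumes the standard convention that each coefficient is an amplitude whose squared magnitude gives the probability of reading that state. The function names (`normalize`, `probabilities`, `measure`) are made up for this illustration and do not come from any quantum library.

```python
import math
import random

def normalize(alpha, beta):
    """Scale the two amplitudes so that the probabilities sum to 1."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm

def probabilities(alpha, beta):
    """Probability of reading 0 and of reading 1 (squared magnitudes)."""
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(alpha, beta, rng=random):
    """Simulate one measurement: the superposition yields a plain 0 or 1."""
    p0, _ = probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# An equal blend of 0 and 1 (illustrative choice of amplitudes).
alpha, beta = normalize(1, 1)
p0, p1 = probabilities(alpha, beta)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
print("ten simulated measurements:", [measure(alpha, beta) for _ in range(10)])
```

This only imitates the statistics of a single qubit on a classical machine; it says nothing about entanglement or about how real quantum hardware works.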

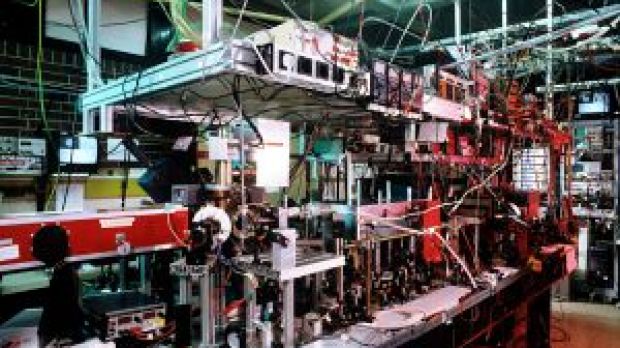

Though quantum computing is still in its infancy (theoretical predictions on quantum computing have been made ever since the 1970s), experiments have been carried out in which quantum computational operations were executed on a very small number of qubits. Research in both theoretical and practical areas continues at a frantic pace, and many national government and military funding agencies support quantum computing research to develop quantum computers for both civilian and national security purposes. It is widely believed that if large-scale quantum computers can be built, they will be able to solve certain problems asymptotically faster than any classical digital computer.

Among the unconventional computing devices, we find the chemical computer, also called the reaction-diffusion computer, BZ computer or gooware computer. This technology is based on a solid-fluid chemical "soup" in which data is represented by varying concentrations of chemicals. The chemical medium hosts naturally occurring chemical reactions, and these reactions carry out the computation. As with the other projects, this one is in its early stages, but it is believed that it will prove very useful to industrial machines.

In 1989, it was demonstrated how light-sensitive chemical reactions could perform image processing. Andrew Adamatzky at the University of the West of England has demonstrated simple logic gates using reaction-diffusion processes. Furthermore, he has shown theoretically how a hypothetical "2+ medium" modeled as a cellular automaton can carry out computational algorithms. The breakthrough came when he read a theoretical article by two scientists who illustrated how to build logic gates using the balls on a billiard table as an example. Each ball represents one bit, and together the two balls behave like a logical AND gate: if two balls are shot towards a common point and they collide, the recorded bit is 1; otherwise it is 0. Adamatzky's great achievement was to transfer this principle to the BZ chemical medium and replace the billiard balls with waves. If two waves are present in the solution, they meet and create a third wave, which is registered as a 1. Adamatzky is currently cooperating with other scientists in producing thousands of chemical variants in order to build various logic gates. The team currently aims at constructing a pocket computer based on this research.
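The collision-based AND gate described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Adamatzky's actual setup: the channel is a one-dimensional row of cells, each input bit set to 1 launches a wavefront from one end, and the output is 1 only if the two fronts meet. The function name `collision_gate` and the channel length are invented for the illustration.

```python
def collision_gate(a, b, length=11):
    """Return 1 if two wavefronts meet in a 1-D channel, else 0.

    a, b   -- input bits; a truthy value launches a wave from that end
    length -- number of cells in the (hypothetical) channel
    """
    left = 0 if a else None             # wave A starts at the left, moves right
    right = length - 1 if b else None   # wave B starts at the right, moves left
    for _ in range(length):
        if left is not None and right is not None and left >= right:
            return 1                    # fronts collided: a third wave appears
        if left is not None:
            left += 1
        if right is not None:
            right -= 1
    return 0                            # zero or one wave: nothing to collide with

# Truth table of the gate.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", collision_gate(a, b))
```

A lone wave simply runs off the end of the channel, which is why only the input pair (1, 1) produces an output of 1.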

Of course, the technology still needs tuning, as it suffers from significant flaws. One of them is the speed of wave propagation: the waves spread at a rate of only a few millimeters per minute. According to Adamatzky, this problem can be eliminated by placing the gates very close to each other (at distances measured in microns or even nanometers), so that signals are transferred quickly. Another solution would be to find different chemical reactions in which waves propagate much faster. A growing number of people in the computer industry are starting to realize the potential of this technology. Even IBM has taken an interest, and it is presently testing new ideas in the field of microprocessing that have many similarities to the basic principles of a chemical computer.
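A quick back-of-the-envelope calculation shows why gate spacing matters so much. The sketch below assumes a wave speed of 3 mm per minute, which is only a stand-in for "a few millimeters per minute"; the function name and the sample distances are likewise chosen for illustration.

```python
def travel_time_seconds(distance_mm, speed_mm_per_min=3.0):
    """Time for a chemical wave to cover distance_mm at the assumed speed."""
    return distance_mm * 60.0 / speed_mm_per_min

# Hypothetical gate spacings: 1 cm, 1 mm and 1 micron (0.001 mm).
for label, distance_mm in (("1 cm", 10.0), ("1 mm", 1.0), ("1 micron", 0.001)):
    seconds = travel_time_seconds(distance_mm)
    print(f"{label:>8}: {seconds:.3f} s")
```

Under that assumption, a signal needs minutes to cross a centimeter but only a small fraction of a second to cross a micron, which is the intuition behind packing the gates tightly.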

We move on to the DNA computer. DNA computing is a form of computing that uses DNA double helices and molecular biology, instead of traditional silicon-based technology, to process information. One of its main intended purposes is to improve existing medical technology. The first to provide both theoretical support and practical experiments was Leonard Adleman of the University of Southern California. In 1994, Adleman demonstrated a proof-of-concept use of DNA as a form of computation by solving a seven-point Hamiltonian path problem.

On April 28, 2004, Ehud Shapiro, Yaakov Benenson, Binyamin Gil, Uri Ben-Dor, and Rivka Adar at the Weizmann Institute announced in the journal Nature that they had constructed a DNA computer. Their model was coupled with an input/output module and was capable of identifying cancerous activity within a cell and then releasing an anti-cancer drug upon a positive diagnosis.

How does a DNA computer work? I assume you remember something about massively parallel computing from the second article of this series. The DNA computer is fundamentally similar: it relies on the immense number of DNA molecules to try many different possibilities at once.
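The "try every possibility at once" idea can be imitated on an ordinary computer as a generate-and-filter search for a Hamiltonian path, the kind of problem Adleman tackled. The sketch below is only an analogy: a classical program checks the candidates one after another, whereas the DNA strands would form and be filtered in parallel. The four-vertex graph and the function name `hamiltonian_paths` are invented for this example and are not Adleman's original instance.

```python
from itertools import permutations

def hamiltonian_paths(vertices, edges, start, end):
    """Return every ordering that visits each vertex once along directed edges."""
    edge_set = set(edges)
    found = []
    for order in permutations(vertices):       # generate all candidate paths
        if order[0] != start or order[-1] != end:
            continue                            # filter: wrong endpoints
        if all((u, v) in edge_set for u, v in zip(order, order[1:])):
            found.append(order)                 # filter: every step is a real edge
    return found

# Hypothetical directed graph on four vertices.
EDGES = [(0, 1), (1, 2), (2, 3), (0, 2), (1, 3), (2, 1)]
paths = hamiltonian_paths(range(4), EDGES, start=0, end=3)
print(paths)
```

The number of candidate orderings grows very quickly with the number of vertices, which is exactly the work that the huge population of DNA molecules is meant to absorb.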

Or think of DNA as software and enzymes as hardware. Put them together in a test tube. The way these molecules react chemically with each other allows simple operations to be performed as a byproduct of the reactions. The scientists tell the devices what to do by controlling the composition of the DNA software molecules. It is a completely different approach from pushing electrons around a dry circuit in a conventional computer.

From what scientists understand so far, this kind of computer should excel at certain specialized problems, being faster and smaller than any other computer built to date. However, it has been proven that DNA computing does not provide any new capabilities from the standpoint of computational complexity theory. For example, problems whose resource requirements grow exponentially with the size of the input on von Neumann machines (EXPSPACE problems) still grow exponentially on DNA machines. For very large EXPSPACE problems, the amount of DNA required is too large to be practical. From this point of view, quantum computers seem to be a better alternative.
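A rough calculation illustrates how quickly the required amount of DNA gets out of hand. The sketch makes two simplifying assumptions that are not from the research itself: a problem of size n has 2^n candidate solutions, and each candidate needs at least one DNA strand. It then looks for the smallest n at which the number of strands exceeds one mole of molecules (the Avogadro constant).

```python
AVOGADRO = 6.02214076e23  # molecules per mole (exact SI definition)

def candidates(n):
    """Number of candidate solutions, assuming 2**n for a problem of size n."""
    return 2 ** n

def smallest_n_exceeding(limit):
    """Smallest n for which the candidate count is larger than limit."""
    n = 0
    while candidates(n) <= limit:
        n += 1
    return n

n_mole = smallest_n_exceeding(AVOGADRO)
print("problem size at which one strand per candidate exceeds a mole:", n_mole)
```

Every additional unit of problem size doubles the amount of material, so parallelism in the test tube postpones the exponential wall rather than removing it.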

Last but not least, we take a look at the optical computer. Whereas a conventional computer uses the free electrons found in transistors to execute blazing-fast calculations, the optical computer uses bound electrons in insulating crystals. The resulting digital signals are natively modulated onto a carrier light wave in the visible spectrum, and no modulator or demodulator is needed, because the visible light spectrum theoretically offers 10 THz (terahertz) of bandwidth. It is similar to performing digital computation via radio waves.

It is interesting to point out that modern (normal) electronic computers are taking on significant radio wave properties by themselves. Since the frequency of the system clocks on fast systems has passed the single gigahertz range, circuit designers must consider that any electronic signal varying at such rates will be giving off radio waves at that frequency. This means that a wire in a computer performs the dual function of a conductor of electricity and a waveguide for a gigahertz frequency radio wave.

The optical computer would perform its computations with photons or polaritons, as opposed to the more traditional electron-based computation. Optical computing is a major branch of the study of photonics and polaritonics. Electronic computation already sometimes involves communication via photonic pathways; popular devices of this class include FDDI interfaces. To send information via photons, electronic signals are converted by lasers and the light is guided down an optical fiber.

One fundamental limit of this technology has to do with size. The optical computer uses optical fibers in its microprocessor, and optical fibers on an integrated optic chip are ten times wider than the traces on an integrated electronic circuit chip. As stated before, we won't see any transistors in an optical computer. Instead, there are crystals, which have the same cross-section as the fibers but need a length of about 1 mm, and so are immense compared to present-day transistors. Signal travel times will therefore be longer, and the processing speed won't be improved that much. Current crystals also need light with an intensity of 1 GW (gigawatt)/cm². As a typical die size (the size of a microchip) is about 1 cm², and some absorption takes place, kilowatts would be needed to power an optical chip. Keep in mind that this power is enough only for pulsed (not continuous) operation. With the near-future introduction of nanotubes, this problem could be easily solved. The biggest advantage offered by optical technologies is expected to be the synergy with existing optical telecommunication infrastructures.

Up to this date, no true optical computer is known to exist. For now, only devices that are best classified as optical switches have been tested in the laboratory. Transistor-like devices that are composed entirely of optical components are very new and experimental at best.

These are, in my opinion, the most promising technologies that could change how humans interact with machines. All of them will also be influenced in some way by the adoption of nanotechnology. That being said, my computer timeline can be considered concluded, although it is in no way a definitive one.

From now on, the upcoming articles will present the basics and history of each computer component and each peripheral component that can be connected to a computer. I thought it would be a good idea to begin with the microprocessor, as it is considered to be the core of the computer. Until next time!
