Cookies that add up to an oversized header value can lead to a denial-of-service (DoS) condition in which the user is denied access to a domain.

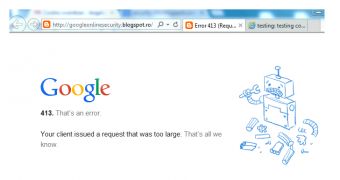

Bogdan Calin from Acunetix, a company that provides website security solutions, has found that, if a server is sent cookies with a large header line, it rejects the requests and returns the “400 Bad Request” error code; some web servers return the “413 Request Entity Too Large” error.

The server also states the reason for the rejection, saying that the web browser “sent a request that this server could not understand. Size of a request header field exceeds server limit.”
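The rejection described above can be pictured with a minimal sketch of a server-side check. This is not the code of any real web server: the function name `check_request_headers` and the 8190-byte limit are assumptions chosen for illustration, and only the error text is taken from the message quoted above.

```python
# Assumed per-field limit in bytes; real servers make this configurable.
HEADER_FIELD_LIMIT = 8190


def check_request_headers(headers: dict[str, str],
                          limit: int = HEADER_FIELD_LIMIT) -> tuple[int, str]:
    """Return (status_code, reason) for a request with the given headers."""
    for name, value in headers.items():
        # Measure the header line roughly as it would appear on the wire.
        line = f"{name}: {value}"
        if len(line.encode("latin-1")) > limit:
            return 400, "Size of a request header field exceeds server limit."
    return 200, "OK"


# A normal request passes; one with a 9000-byte cookie value is rejected.
small = check_request_headers({"Host": "example.com", "Cookie": "a=1"})
large = check_request_headers({"Host": "example.com", "Cookie": "a=" + "x" * 9000})
print(small)
print(large)
```

The point of the sketch is that the check runs before any page logic, so every request carrying the oversized cookies fails, whichever page is requested.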

Building on this, Calin created a proof-of-concept page on Blogspot that works in Internet Explorer (other domains work in Google Chrome, too). The page uses JavaScript to create a large number of cookies, set with the domain attribute and without an expiration date.

When a user accessed the page, everything would seem fine, but the browser would store the cookies and send all of them to the web server on the next visit to the domain. Because the server could not process such a large header, the user was denied access to every page of the website.
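A rough calculation shows how few cookies are needed to trigger this. The sketch below assumes cookies of about 4,000 bytes each and a server limit of 8,190 bytes for the Cookie header line; both figures are illustrative assumptions, not values reported by Calin.

```python
COOKIE_SIZE = 4000          # assumed bytes per cookie ("name=value")
SERVER_HEADER_LIMIT = 8190  # assumed server limit for one header line


def cookie_header_length(count: int, cookie_size: int = COOKIE_SIZE) -> int:
    """Length of 'Cookie: c1; c2; ...' for `count` cookies of equal size."""
    if count == 0:
        return 0
    separators = 2 * (count - 1)  # "; " between consecutive cookies
    return len("Cookie: ") + count * cookie_size + separators


def cookies_needed(limit: int = SERVER_HEADER_LIMIT,
                   cookie_size: int = COOKIE_SIZE) -> int:
    """Smallest number of cookies whose combined header exceeds `limit`."""
    count = 1
    while cookie_header_length(count, cookie_size) <= limit:
        count += 1
    return count


print(cookies_needed())
```

Under these assumptions, two cookies still fit within the limit and the third pushes the header over it, which is why a single visit to a malicious page can be enough.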

Regaining access to the website requires deleting the bad cookies. Although this process is not complicated, it may not be the most evident course of action for the average user when encountering the denial message.

Cybercriminals could plot an attack to prevent users from visiting a targeted website. This would entail creating oversized cookies and serving them to the users.

Calin discovered the cookie attack after a client reported problems with security tests for certain parts of the website.

“When a specific page was visited, a cookie with a random name and a large value was set. This page had many parameters and the crawler had to request this page multiple times to test all the possible page variants. Our crawler saves all the cookies it receives in a ‘cookie jar’ and subsequently re-sends them in future requests to the same domain. After each visit to this page the cookie jar quickly contained a lot of cookies,” he writes in a company blog post.
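The behavior Calin describes can be simulated with a toy cookie jar. The class and function names here are hypothetical and are not Acunetix's crawler code; the 1,000-byte cookie value and the 8,190-byte header limit are likewise assumptions made only for illustration.

```python
import secrets

HEADER_LIMIT = 8190  # assumed server limit for the Cookie header line
VALUE_SIZE = 1000    # assumed size of the "large value" set on each visit


class CookieJar:
    """Toy jar: keeps every cookie received and re-sends all of them."""

    def __init__(self) -> None:
        self._cookies: dict[str, dict[str, str]] = {}

    def store(self, domain: str, name: str, value: str) -> None:
        self._cookies.setdefault(domain, {})[name] = value

    def header_for(self, domain: str) -> str:
        pairs = self._cookies.get(domain, {})
        return "; ".join(f"{name}={value}" for name, value in pairs.items())


def visits_until_rejected(domain: str = "example.test") -> int:
    """Count page visits until the next request would exceed the limit."""
    jar = CookieJar()
    visits = 0
    while len("Cookie: " + jar.header_for(domain)) <= HEADER_LIMIT:
        visits += 1
        # Each visit sets a cookie with a random name and a large value,
        # so nothing is overwritten and the jar only grows.
        jar.store(domain, secrets.token_hex(8), "x" * VALUE_SIZE)
    return visits


print(visits_until_rejected())
```

Because every cookie gets a fresh random name, the header grows by roughly one cookie per visit, and a crawler that requests the same page repeatedly hits the limit after only a handful of requests.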

It appears that someone else made the discovery at the beginning of the year, as Calin has also pointed out at the end of his post.

Egor Homakov, consultant at Sakurity, provides three proofs of concept, Blogspot.com being among them. He also offers a few tips directed at hackers, browsers, admins and users.

Calin has contacted Google about the problem and they have replied that a fix on the application level may not be possible.
