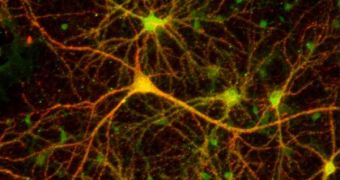

The human brain is arguably the most complex natural system known. Over millions of years of evolution it grew to an impressive average size, and it now encompasses billions of neurons, tied together by a massive number of synaptic connections. Understanding how it functions is one of mankind's greatest pursuits, but the complexity of the organ has so far frustrated attempts at deciphering its underlying mechanisms. Before advanced studies can be performed, experts first need to get a grip on how its basic unit, the neuron, functions, right down to the protein level.

Proteins play a huge role in changing a neuron's voltage, which is of paramount importance in electrical signal transmission. These electrical signals are what drive our thoughts, memories, association patterns, logic and movements, and they are constantly being transmitted through neural networks, several million times per second. Understanding the neuron itself, as the basic component of the cortex, requires a balance of experimentation and computer modeling. A new effort to decipher neural actions recently began at Northwestern University.

The interdisciplinary partnership is led by Bill Kath, a professor of engineering sciences and applied mathematics at the NU McCormick School of Engineering and Applied Science, working together with colleague Nelson Spruston, a professor of neurobiology and physiology in the NU Weinberg College of Arts and Sciences. Kath is in charge of developing computer models, while Spruston designs the experiments to be analyzed. The two have been working together for more than a decade.

Studying neurons is, however, incredibly difficult, especially when it comes to voltage changes. These changes can unfold over several milliseconds or over a few seconds, so the relevant time scale varies considerably. To get past this obstacle, the two researchers and graduate student Vilas Menon turned to nature for inspiration and decided to use evolution as an investigative tool. They devised evolutionary algorithms that work with 100 models with different parameters, rather than the usual one.

Traits taken from the majority of the models can be carried into a new generation, in a process known as “breeding.” The traits are mixed and matched and, several generations later, the result is a model that reproduces the behavior of the thing being modeled, in this case the neuron. In the new experiments, the researchers introduced a twist in the algorithms, and allowed the structure of the model (not just its parameters) to be “mutated” during the “breeding.”
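To give a feel for how such "breeding" works, here is a minimal Python sketch of a generic evolutionary algorithm. It is not the researchers' code. The toy model (a single exponential decay), the target trace, the function names and every numeric setting other than the population of 100 are assumptions chosen for illustration. For simplicity it mutates only the parameters, not the model structure described in the article.

```python
import math
import random

def simulate(params, times):
    """Toy stand-in for a neuron model: amplitude * exp(-t / tau)."""
    amplitude, tau = params
    return [amplitude * math.exp(-t / tau) for t in times]

def error(params, times, target):
    """Sum of squared differences between the model output and the target trace."""
    return sum((m - y) ** 2 for m, y in zip(simulate(params, times), target))

def breed(parent_a, parent_b, rng, mutation_scale=0.05):
    """Mix traits from two parents, then perturb each trait slightly."""
    child = [rng.choice(pair) for pair in zip(parent_a, parent_b)]
    # Clamp to a small positive value so tau never reaches zero.
    return [max(1e-3, p * (1.0 + rng.gauss(0.0, mutation_scale))) for p in child]

def evolve(times, target, population_size=100, generations=60, seed=0):
    """Evolve a population of candidate parameter sets toward the target."""
    rng = random.Random(seed)
    population = [[rng.uniform(0.1, 5.0), rng.uniform(0.1, 5.0)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Rank candidates by fit and keep the best quarter unchanged.
        population.sort(key=lambda p: error(p, times, target))
        survivors = population[: population_size // 4]
        # Refill the population with children bred from the survivors.
        children = [breed(rng.choice(survivors), rng.choice(survivors), rng)
                    for _ in range(population_size - len(survivors))]
        population = survivors + children
    return min(population, key=lambda p: error(p, times, target))

# Hypothetical "recorded" trace generated from known parameters.
times = [0.1 * i for i in range(50)]
target = simulate([2.0, 1.5], times)

best = evolve(times, target)
print("best parameters:", best)
print("remaining error:", error(best, times, target))
```

Because the best candidates are carried over unchanged each generation, the fit can only stay the same or improve. In this toy setup the best parameters should end up close to the ones used to generate the target trace.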

“In the end, the computer found a quite simple state-dependent model for the sodium channels that provides a very accurate behavior on short time scales and out to several seconds, as well,” Kath says. “We want to make sure we truly understand how these channels work by building a model that can recapitulate all the features we've observed. Making computer models is a way of identifying both what you understand and also where the gaps in your knowledge need to be filled,” adds Spruston.

“If you want to understand how this neural circuit is processing information and memory, you have to understand how these neurons behave in different situations. If you leave out key details, you might miss something important,” Kath concludes. The team's results were recently published in the renowned journal Proceedings of the National Academy of Sciences (PNAS).
