All the people in the world may originally have shared a common language, suggest experts who recently analyzed the amount of information contained in the arrangement of words. They explain that this amount is consistent across Earth's languages.

The correlation holds even between languages that are not related to each other. It is this consistency that led the scientists to propose a common ancestor for all of Earth's languages.

Another explanation for this may be the way in which the human brain processes speech. It could be that the languages developed as a result of this trait, without people ever being aware of the connection.

Details of the investigation that led to this conclusion are published in the May 13 issue of the peer-reviewed journal PLoS ONE, which is published by the Public Library of Science, Wired reports.

The meaning of a language, experts say, resides both in the words its speakers choose and in the order in which those words are used. The amount of data encoded in each of these two variables differs in some languages, but the overall process is the same.

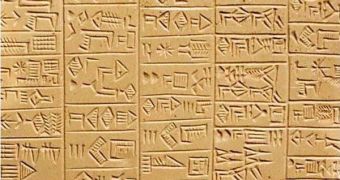

“It doesn’t matter what language or style you take. In languages as diverse as Chinese, English and Sumerian, a measure of the linguistic order, in the way words are arranged, is something that seems to be a universal of languages,” explains Marcelo Montemurro.

The expert, a systems biologist at the University of Manchester, in England, is the lead author of the PLoS ONE paper. He is also the first to realize that the amount of data encoded in a language can be analyzed by calculating its entropy.

Together with paper coauthor Damián Zanette, who is based at the National Atomic Energy Commission, in Argentina, the expert analyzed how much data is encoded in English, French, German, Finnish, Tagalog, Sumerian, Old Egyptian and Chinese.

“If we destroy the original text by scrambling all the words, we are preserving the vocabulary. What we are destroying is the linguistic order, the patterns that we use to encode information,” the expert says.

What the researchers found really interesting was the fact that the difference in entropy between the original and the scrambled texts was consistent across all eight languages. “We found, very interestingly, that for all languages we got almost exactly the same value,” Montemurro explains.
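The scramble-and-compare idea can be illustrated with a minimal Python sketch. This is not the estimator used in the paper, which the article does not describe. As an assumption made purely for illustration, word order is measured here with a simple bigram conditional entropy (how unpredictable the next word is, given the current one), and the function names are hypothetical. Shuffling keeps the vocabulary and word frequencies intact, so any change in this measure comes from the lost ordering alone.

```python
import math
import random
from collections import Counter


def unigram_entropy(words):
    """Shannon entropy (bits per word) of the word-frequency distribution."""
    counts = Counter(words)
    total = len(words)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def bigram_conditional_entropy(words):
    """Plug-in estimate of H(next word | current word), in bits.

    Assumption: a crude stand-in for a real entropy estimator; it only
    captures dependencies between adjacent words.
    """
    pair_counts = Counter(zip(words, words[1:]))
    first_counts = Counter(words[:-1])
    total = len(words) - 1
    h = 0.0
    for (first, _second), c in pair_counts.items():
        # p(first, second) * log2 p(second | first)
        h -= (c / total) * math.log2(c / first_counts[first])
    return h


def order_information(words, seed=0):
    """Entropy of a shuffled copy minus entropy of the original text."""
    rng = random.Random(seed)
    shuffled = words[:]      # same vocabulary, same frequencies
    rng.shuffle(shuffled)    # destroys only the word order
    return bigram_conditional_entropy(shuffled) - bigram_conditional_entropy(words)


if __name__ == "__main__":
    # Toy text, not data from the study.
    text = ("the cat sat on the mat and the dog sat on the rug " * 20).split()
    print("vocabulary entropy (bits/word):", unigram_entropy(text))
    print("order information (bits/word):", order_information(text))
```

On real texts a toy measure like this is sensitive to text length and vocabulary size, so it should not be expected to reproduce the near-constant value the researchers report.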

“For some reason these languages evolved to be constrained in this framework, in these patterns of word ordering,” he goes on to say. One of the implications of this could be that the universal properties of language may in fact only show up at high levels of organization.
