A search engine is a technology that gathers links, images and other files from web pages around the world and displays them when a user types a keyword matching information stored in its database. Popular search engines include Yahoo, Ask and Live Search, but the most powerful is arguably Google, which combines highly developed technology with one of the largest indexes of websites on the internet.

Many webmasters use search engine optimization (SEO) techniques to help search engines crawl and index their websites more effectively, and to rank higher in search results (Google's PageRank, for example). One of the most popular tricks concerns images: many photos are published under a generic name such as "Picture00001.jpg", whereas the title should describe exactly the object shown in the picture.

Plenty of SEO experts on the internet publish tips and tricks for improving your ranking, but every search engine follows its own rules, so using an incorrect technique can get your website removed from the index. That is why many search engine providers now offer detailed documentation to guide webmasters in optimizing their websites.

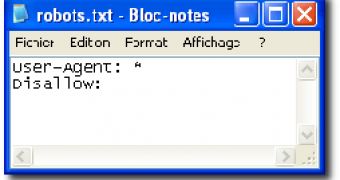

One of the most important elements of search engine optimization is the robots.txt file, a technique that most users avoid because it is regarded as a difficult way to improve the indexing process. In fact, robots.txt is a simple text file containing instructions for the search engine bot that crawls your website; the bot reads those instructions directly from the file.

"The key is a simple file called robots.txt that has been an industry standard for many years. It lets a site owner control how search engines access their web site. With robots.txt you can control access at multiple levels -- the entire site, through individual directories, pages of a specific type, down to individual pages. Effective use of robots.txt gives you a lot of control over how your site is searched, but it's not always obvious how to achieve exactly what you want. This is the first of a series of posts on how to use robots.txt to control access to your content," Dan Crow, Product Manager at Google, wrote in a blog post.

The file lets you customize the indexing process: you can specify which links may be crawled and place restrictions on the files or logs you want to keep out of search results.
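To make the idea of control at several levels more concrete, here is a minimal sketch that checks a set of rules with Python's standard `urllib.robotparser` module. The rules, the `ExampleBot` crawler name, the paths and the `www.example.com` address are all assumptions made up for illustration; they do not come from Google's post. The standard-library parser only does simple prefix matching, so the sketch covers the whole-site, directory and single-page levels but not rules for pages of a specific type.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: the crawler name and all paths are illustrative only.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/
Disallow: /drafts/unfinished.html
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content held in a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
SITE = "https://www.example.com"  # placeholder address

# Entire site: ExampleBot is barred from every URL.
site_blocked = not parser.can_fetch("ExampleBot", SITE + "/index.html")

# Individual directory: every other crawler is barred from /private/.
dir_blocked = not parser.can_fetch("AnyCrawler", SITE + "/private/report.html")

# Individual page: only this one file under /drafts/ is barred.
page_blocked = not parser.can_fetch("AnyCrawler", SITE + "/drafts/unfinished.html")
other_allowed = parser.can_fetch("AnyCrawler", SITE + "/drafts/finished.html")

print(site_blocked, dir_blocked, page_blocked, other_allowed)
```

A well-behaved crawler performs the same kind of check before requesting a page. The file is only a set of instructions, so it relies on the crawler choosing to follow them.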

"However, you may have a few pages on your site you don't want in Google's index. For example, you might have a directory that contains internal logs, or you may have news articles that require payment to access. You can exclude pages from Google's crawler by creating a text file called robots.txt and placing it in the root directory. The robots.txt file contains a list of the pages that search engines shouldn't access. Creating a robots.txt is straightforward and it allows you a sophisticated level of control over how search engines can access your web site," Crow added.
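The case described in the quote, a directory of internal logs plus paid news articles, could be handled with a file like the one produced by the sketch below. The helper `build_robots_txt` and the paths `/logs/` and `/news/premium/` are hypothetical names chosen for this example, not something taken from Google's documentation.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, user_agent="*"):
    """Return robots.txt content that excludes the given path prefixes.

    Hypothetical helper for illustration; "*" addresses all crawlers.
    """
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


# Assumed site layout: internal logs and pay-to-read articles.
robots_txt = build_robots_txt(["/logs/", "/news/premium/"])
print(robots_txt)

# Sanity check of the generated rules with the standard-library parser.
checker = RobotFileParser()
checker.parse(robots_txt.splitlines())
logs_allowed = checker.can_fetch("Googlebot", "https://www.example.com/logs/2007-01.log")
home_allowed = checker.can_fetch("Googlebot", "https://www.example.com/")
```

The generated text would then be saved as `robots.txt` in the root directory of the site, which is where the quote says the file has to be placed.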

To read Crow's entire blog post, follow this link.
