You know how you can never figure out how to caption your pictures when you upload them to the Internet? Well, Google has come up with a solution for that, too.

The company has revealed a new captioning system that recognizes the content of photos and automatically describes them in natural language, a problem its researchers have clearly been working hard on.

As Google points out, people can summarize complex scenes quite easily in just a few words, but this is a much harder job for a computer. Even so, Google thinks it has closed some of that gap with a machine-learning system that can automatically produce accurate captions for images the first time it sees them.

The goal isn't just to help you tag your Facebook photos, mind you: the technology could also help visually impaired people understand pictures and make it easier to search for images on Google.

“Many efforts to construct computer-generated natural descriptions of images propose combining current state-of-the-art techniques in both computer vision and natural language processing to form a complete image description approach. But what if we instead merged recent computer vision and language models into a single jointly trained system, taking an image and directly producing a human readable sequence of words to describe it?” write Google Research Scientists Oriol Vinyals, Alexander Toshev, Samy Bengio, and Dumitru Erhan.

How does it work?

They explain that the idea comes from recent advances in machine translation, where one Recurrent Neural Network (RNN) reads, for instance, a French sentence and transforms it into a vector representation, and a second RNN then uses that vector to generate the target sentence in German.
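To make that two-network idea concrete, here is a minimal sketch in plain Python, assuming toy vocabularies, an 8-unit hidden state, and random untrained weights (all hypothetical, not taken from Google's system). The `encode` function plays the first RNN, squeezing the French words into one vector, and `decode` plays the second RNN, emitting German words one at a time from that vector. Because nothing is trained, the translation it prints is meaningless; the point is only how data flows between the two networks.

```python
import math
import random

# Hypothetical toy sizes and vocabularies, chosen only for illustration.
HIDDEN = 8
SRC_VOCAB = ["<pad>", "le", "chat", "dort"]
TGT_VOCAB = ["<start>", "<end>", "die", "katze", "schlaeft"]

rng = random.Random(0)

def rand_matrix(rows, cols):
    """Random weights; a real translator would learn these from parallel text."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def one_hot(index, size):
    v = [0.0] * size
    v[index] = 1.0
    return v

def rnn_step(w_in, w_rec, x, h):
    """One vanilla RNN step: h' = tanh(W_in x + W_rec h)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]

# First RNN (encoder) parameters.
enc_in, enc_rec = rand_matrix(HIDDEN, len(SRC_VOCAB)), rand_matrix(HIDDEN, HIDDEN)
# Second RNN (decoder) parameters plus a projection onto German words.
dec_in, dec_rec = rand_matrix(HIDDEN, len(TGT_VOCAB)), rand_matrix(HIDDEN, HIDDEN)
dec_out = rand_matrix(len(TGT_VOCAB), HIDDEN)

def encode(words):
    """Read the source sentence word by word; the final hidden state is its vector."""
    h = [0.0] * HIDDEN
    for w in words:
        h = rnn_step(enc_in, enc_rec, one_hot(SRC_VOCAB.index(w), len(SRC_VOCAB)), h)
    return h

def decode(vector, max_len=5):
    """Start from the sentence vector and greedily pick the highest-scoring next word."""
    h = vector
    token = TGT_VOCAB.index("<start>")
    output = []
    for _ in range(max_len):
        h = rnn_step(dec_in, dec_rec, one_hot(token, len(TGT_VOCAB)), h)
        scores = matvec(dec_out, h)
        token = max(range(len(scores)), key=scores.__getitem__)
        if TGT_VOCAB[token] == "<end>":
            break
        output.append(TGT_VOCAB[token])
    return output

sentence_vector = encode(["le", "chat", "dort"])
print(len(sentence_vector), decode(sentence_vector))
```

The only thing the second network ever sees of the French sentence is `sentence_vector`, which is exactly the property that lets a different kind of encoder take the first network's place.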

They theorized that replacing the first RNN and its input words with a deep Convolutional Neural Network (CNN) trained to classify objects in images could help them achieve their goal.

“Normally, the CNN’s last layer is used in a final Softmax among known classes of objects, assigning a probability that each object might be in the image. But if we remove that final layer, we can instead feed the CNN’s rich encoding of the image into a RNN designed to produce phrases. We can then train the whole system directly on images and their captions, so it maximizes the likelihood that descriptions it produces best match the training descriptions for each image,” the scientists write.
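The sketch below illustrates the swap the researchers describe, again assuming tiny random weights and a one-layer stand-in for a real pretrained CNN (every name and size here is hypothetical). `classify` shows the usual use of the CNN, a softmax over object classes. The captioning path drops that final layer and hands the CNN's encoding to a word-level RNN, then scores a caption with log p(caption | image) = Σ_t log p(word_t | image, earlier words), the quantity training would push up for each image and its reference caption. As a simplification, the image encoding here initializes a plain RNN's hidden state; Google's paper describes an LSTM that receives the image as its first input. The gradient-based training loop itself is omitted.

```python
import math
import random

rng = random.Random(1)

# Hypothetical toy sizes; real systems use large CNN encodings and vocabularies.
PIXELS, FEATURES, CLASSES, HIDDEN = 12, 6, 3, 6
VOCAB = ["<start>", "<end>", "a", "dog", "on", "grass"]

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def one_hot(i, n):
    v = [0.0] * n
    v[i] = 1.0
    return v

def log_softmax(scores):
    """Numerically stable log of softmax(scores)."""
    top = max(scores)
    log_z = top + math.log(sum(math.exp(s - top) for s in scores))
    return [s - log_z for s in scores]

# Stand-in for a pretrained CNN: one ReLU layer that produces the image encoding.
cnn_body = rand_matrix(FEATURES, PIXELS)
cnn_classifier = rand_matrix(CLASSES, FEATURES)  # the final layer captioning removes

def cnn_encoding(pixels):
    return [max(0.0, a) for a in matvec(cnn_body, pixels)]

def classify(pixels):
    """Usual use: a softmax giving a probability for each known object class."""
    return [math.exp(lp) for lp in log_softmax(matvec(cnn_classifier, cnn_encoding(pixels)))]

# Caption decoder fed by the CNN encoding instead of the classifier layer.
img_to_hidden = rand_matrix(HIDDEN, FEATURES)
w_in, w_rec = rand_matrix(HIDDEN, len(VOCAB)), rand_matrix(HIDDEN, HIDDEN)
w_out = rand_matrix(len(VOCAB), HIDDEN)

def caption_log_likelihood(pixels, caption):
    """Sum of log p(next word | image, previous words), including the final <end>."""
    h = [math.tanh(a) for a in matvec(img_to_hidden, cnn_encoding(pixels))]
    prev = VOCAB.index("<start>")
    total = 0.0
    for word in caption + ["<end>"]:
        x = matvec(w_in, one_hot(prev, len(VOCAB)))
        h = [math.tanh(a + b) for a, b in zip(x, matvec(w_rec, h))]
        target = VOCAB.index(word)
        total += log_softmax(matvec(w_out, h))[target]
        prev = target
    return total

image = [rng.random() for _ in range(PIXELS)]
print(classify(image))
print(caption_log_likelihood(image, ["a", "dog", "on", "grass"]))
```

Training the "whole system directly on images and their captions" would mean adjusting every weight above, including `cnn_body`, so that this log-likelihood rises for each training pair.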

They've already tested the system on several publicly available datasets, including PASCAL, Flickr8k, Flickr30k and SBU. The system is still far from perfect, but it produces a reasonable caption most of the time.

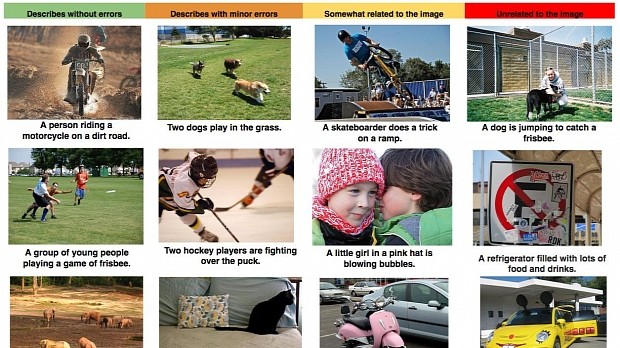

A set of evaluation results, which you can check out in the gallery, shows instances where the system described an image without errors alongside cases where its caption was completely unrelated to the picture.
