It's not much of a secret that the geeks at Google love data, and the more the better. Now Google is making available some of the data it has gathered through its Books project, and it's quite a treasure trove. A team of researchers analyzed some 5.2 million books, containing roughly 500 billion words, to see how often words and phrases have been used over the past several centuries.

"Scholars interested in topics such as philosophy, religion, politics, art and language have employed qualitative approaches such as literary and critical analysis with great success," Jon Orwant, Engineering Manager for Google Books, writes.

"As more of the world’s literature becomes available online, it’s increasingly possible to apply quantitative methods to complement that research," he said.

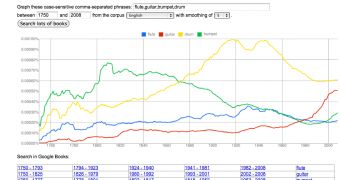

"So today Will Brockman and I are happy to announce a new visualization tool called the Google Books Ngram Viewer, available on Google Labs," he wrote.

The tool is based on an analysis of the books Google has digitized. To date, the company has scanned some 15 million volumes, which are now preserved in digital form.

Of those, 5.2 million books in Chinese, English, French, German, Russian and Spanish were used to build a huge data set of phrases of up to five words each, recording how often each phrase appears in each year.
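To make the idea of a per-year phrase-count data set a little more concrete, here is a minimal Python sketch. It is not Google's actual pipeline: it assumes a small, hypothetical corpus of (publication year, text) pairs and a naive lowercase whitespace tokenizer, then counts every phrase of one to five words (the "n-grams") separately for each year.

```python
from collections import Counter, defaultdict


def ngrams(tokens, n):
    """Yield every run of n consecutive tokens as a space-joined phrase."""
    for i in range(len(tokens) - n + 1):
        yield " ".join(tokens[i:i + n])


def count_by_year(books, max_n=5):
    """Map each year to a Counter of phrase frequencies for n = 1..max_n.

    `books` is an iterable of (year, text) pairs. Tokenization here is a
    simple lowercase whitespace split, an assumption for illustration only.
    """
    counts = defaultdict(Counter)
    for year, text in books:
        tokens = text.lower().split()
        for n in range(1, max_n + 1):
            counts[year].update(ngrams(tokens, n))
    return counts


# Hypothetical toy corpus: (publication year, text).
corpus = [
    (1900, "the war is over"),
    (1950, "the war is over and the peace begins"),
    (1950, "the peace holds"),
]

table = count_by_year(corpus)
print(table[1950]["the peace"])        # → 2
print(table[1900]["the war is over"])  # → 1
```

Looking up a phrase across all years in such a table gives the kind of time series the Ngram Viewer plots, although the real data set is built from millions of books with far more careful tokenization.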

Because the Google Books archive reaches back hundreds of years, the volume of data is impressive. Researchers can now conduct all manner of studies on it that would otherwise have been impossible.

Google is providing a tool, the Ngram Viewer, which is actually quite fun, for quickly searching and visualizing the data. But it is also making the raw data available to anyone interested.

"We’re also making the datasets backing the Ngram Viewer, produced by Matthew Gray and intern Yuan K. Shen, freely downloadable so that scholars will be able to create replicable experiments in the style of traditional scientific discovery," Orwant said.
