The next-generation Firefox JavaScript engine is here. Or rather, the next-generation JIT (just-in-time) compiler, IonMonkey, is here. IonMonkey is replacing or complementing JägerMonkey, the current JIT in Firefox.

The novelty that IonMonkey brings to the table is a new step in the compile process, plus a few more advanced techniques for determining what to compile.

IonMonkey is now enabled by default in Firefox 18, which means it will be landing in the stable channel in January 2013.

"SpiderMonkey has a storied history of just-in-time compilers. Throughout all of them, however, we’ve been missing a key component you’d find in typical production compilers, like for Java or C++," Mozilla engineer David Anderson wrote.

"The old TraceMonkey, and newer JägerMonkey, both had a fairly direct translation from JavaScript to machine code," he said.

IonMonkey adds a third step. JavaScript code is first translated into an intermediate representation (IR), and it is this IR that various optimization algorithms then work on.
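To make the idea of "optimizing the IR" concrete, here is a toy sketch in Python. It is not IonMonkey's actual IR or code: the `Const` and `BinOp` instruction types and the `constant_fold` pass are hypothetical, invented only to show how an optimization can rewrite an intermediate form before any machine code exists. Constant folding is used here simply as a familiar example of such an optimization.

```python
import operator
from dataclasses import dataclass
from typing import Union

# Hypothetical three-address IR (not IonMonkey's): each instruction writes one named temporary.
@dataclass(frozen=True)
class Const:
    dest: str
    value: int

@dataclass(frozen=True)
class BinOp:
    dest: str
    op: str   # "+" or "*"
    lhs: str
    rhs: str

Instr = Union[Const, BinOp]
OPS = {"+": operator.add, "*": operator.mul}

def constant_fold(ir: list[Instr]) -> list[Instr]:
    """Replace each BinOp whose operands are both known constants with a Const."""
    known: dict[str, int] = {}
    out: list[Instr] = []
    for ins in ir:
        if isinstance(ins, BinOp) and ins.lhs in known and ins.rhs in known:
            ins = Const(ins.dest, OPS[ins.op](known[ins.lhs], known[ins.rhs]))
        if isinstance(ins, Const):
            known[ins.dest] = ins.value
        out.append(ins)
    return out

# IR a front end might emit for the JavaScript expression (2 + 3) * x
ir = [
    Const("t0", 2),
    Const("t1", 3),
    BinOp("t2", "+", "t0", "t1"),
    BinOp("t3", "*", "t2", "x"),
]
for ins in constant_fold(ir):
    print(ins)
```

After the pass, `t2` becomes the constant 5, while `t3` is left alone because `x` is only known at run time. The later machine-code step then has less work to emit.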

One advantage of this approach is that Mozilla can modify existing optimization algorithms, or add new ones, and see how they perform without touching the engine's other components. That makes IonMonkey easier to maintain and improve, and it means the compiler could get considerably better over time.
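The modularity argument can be illustrated with a minimal pass-pipeline sketch, assuming a simplified design in which each optimization is just a function from IR to IR. Everything here (the tuple-based IR, `propagate_copies`, `eliminate_dead_code`, `run_pipeline`, `PASSES`) is hypothetical and does not reflect how IonMonkey is actually organized. The point is only that adding or reordering a pass means editing one list, while the front end and code generator stay unchanged.

```python
from typing import Callable

# Hypothetical IR: each instruction is (dest, op, args).
# ops: "param", "const", "copy", "add", "ret"; args hold temp names (str) or ints.
Instr = tuple[str, str, tuple]
Pass = Callable[[list[Instr]], list[Instr]]

def propagate_copies(ir: list[Instr]) -> list[Instr]:
    """Rewrite uses of t = copy(s) so they refer to s directly."""
    alias: dict[str, str] = {}
    out = []
    for dest, op, args in ir:
        args = tuple(alias.get(a, a) if isinstance(a, str) else a for a in args)
        if op == "copy":
            alias[dest] = args[0]
        out.append((dest, op, args))
    return out

def eliminate_dead_code(ir: list[Instr]) -> list[Instr]:
    """Drop instructions whose result is never used (walks backwards from "ret")."""
    live: set[str] = set()
    out = []
    for dest, op, args in reversed(ir):
        if op == "ret" or dest in live:
            out.append((dest, op, args))
            live.update(a for a in args if isinstance(a, str))
    return list(reversed(out))

def run_pipeline(ir: list[Instr], passes: list[Pass]) -> list[Instr]:
    """Apply each pass in order; passes only see and return IR."""
    for p in passes:
        ir = p(ir)
    return ir

# Adding a pass is a one-line change to this list.
PASSES: list[Pass] = [propagate_copies, eliminate_dead_code]

ir = [
    ("x", "param", ()),
    ("y", "copy", ("x",)),
    ("z", "add", ("y", 1)),
    ("_", "ret", ("z",)),
]
print(run_pipeline(ir, PASSES))
```

Copy propagation makes `y` unused, and dead-code elimination then removes it, leaving just the parameter, the addition and the return. Neither pass needs to know anything about how the IR was produced or how it will be turned into machine code.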

In the final step, the optimized IR is converted into native machine code that the processor runs directly. Obviously, a new JIT isn't much of an improvement if it isn't actually faster. Luckily, it is.

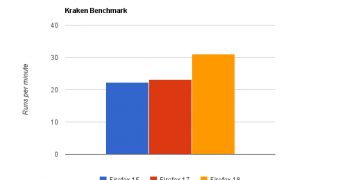

In Mozilla's tests, IonMonkey is noticeably faster than the current JIT, though the size of the gain depends on the benchmark. Mozilla's own Kraken benchmark shows a 26 percent improvement from Firefox 17 to Firefox 18, which is now in the Nightly channel.

On V8, Google's benchmark suite that is being replaced by Octane, Firefox 18 is 7 percent faster than Firefox 17 and 20 percent faster than Firefox 15. And it will keep getting faster, since new improvements can now build on the finished core framework.
