In the past few weeks, Facebook cranked its spam-busting algorithm up a notch. It turned out to be a notch too many, as plenty of app developers complained about getting banned and having their apps deleted without any prior warning.

Facebook has acknowledged this and apologized for the problem. It is also issuing a number of changes and policy updates to make sure that this sort of thing doesn't happen again.

"We recently launched some changes to those systems that over-weighted certain types of user feedback, causing us to erroneously disable some apps," Facebook explained.

"While we quickly re-enabled those apps, we realize that any downtime has a significant impact on both our developers and users," it added.

Facebook is introducing several changes and new features to its enforcement system, designed to make sure both that users have a pleasant experience and that developers don't have their apps cut off on a whim.

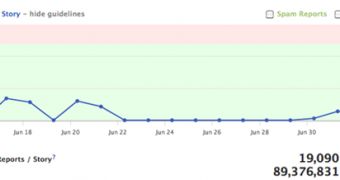

One change is that developers will now be able to see what Facebook users think of their apps. They will get access to data on Likes, comments, clicks and so on, but also to the number of 'hides' or 'Mark as spam' reports their apps get.

Another change is that Facebook will now disable only the channel that is getting the most negative feedback. If an app is too aggressive about posting on its users' friends' walls, for example, Facebook may disable only that ability.

Finally, if an app gets a lot of negative feedback, it is no longer deleted outright, but is put in "disable" mode. This means that the app becomes inaccessible to users, but developers can continue to manage it, see Insights data and make changes.

Facebook has also announced an upcoming system in which developers with proven records and positive feedback will get access to more ways of promoting their apps.

"In the coming months, we will be moving from per-channel enforcements to a more sophisticated ranking model where the amount of distribution that content gets will be a direct function of its quality," Facebook announced.

"Good content will be seen by more people, poor content will be seen by fewer people (and potentially no one). We think this is the right long-term model, as it rewards apps that focus on great social experiences while minimizing negative experiences," it explained.
