A team of investigators from the Salk Institute for Biological Studies has brought science a step closer to understanding the language of the brain. Figuring out how neurons communicate with each other to support capabilities such as sight has long been a major goal of research.

The basics of sight are fairly well known – light enters the eye and excites receptor cells on the retina. The information the photons carry is converted into electrical signals, which are then relayed by nerve cells called neurons to the visual cortex at the back of the head.

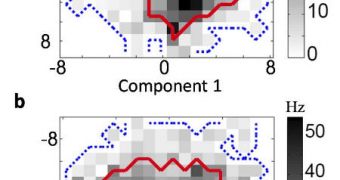

But what experts could never establish was precisely how cells on the retina encode the information we see. This line of research was made more complex by the fact that neurons tend to respond in highly nonlinear ways.

This makes it very difficult for scientists to tease out communication patterns. But Salk physicists have managed to develop a general mathematical framework that may be used to gain more insight into this issue. The model is designed to make the most of the limited measurements available.

One of the most important findings in the new study is that the eyes relay to the brain only information about pairs of temporal stimulus patterns. The team published details of its findings in the latest issue of the peer-reviewed journal PLoS Computational Biology.

“We were surprised to find that higher-order stimulus combinations were not encoded, because they are so prevalent in our natural environment,” explains Tatyana Sharpee, PhD, the leader of the study.

“Humans are quite sensitive to changes in higher-order combinations of spatial patterns. We found it not to be the case for temporal patterns. This highlights a fundamental difference in the spatial and temporal aspects of visual encoding,” she adds.

Sharpee holds an appointment as an assistant professor at the Salk Computational Neurobiology Laboratory. She is also the Helen McLorraine Developmental Chair in Neurobiology at the Institute.

“Up to this point, most of the work has been focused on identifying the features the cell responds to. The question of what kind of information about these features the cell is encoding had been ignored,” adds Salk graduate student Jeffrey D. Fitzgerald, the first author of the new paper.

“The direct measurements of stimulus-response relationships often yielded weird shapes, and people didn't have a mathematical framework for analyzing it,” he adds, quoted by ScienceDaily.

Thus far, experts have learned that the eyes transmit individual pieces of information to the brain, such as the motion, brightness, position, color and shape of an object we see. Each of these types of information excites a particular type of receptor cell on the retina.

“Biological systems across all scales, from molecules to ecosystems, can all be considered information processors that detect important events in their environment and transform them into actionable information,” Sharpee explains.

“We therefore hope that this way of 'focusing' the data by identifying maximally informative, critical stimulus-response relationships will be useful in other areas of systems biology,” she concludes.
