Even with all the progress made in terms of processor integration, software continues to be divided into programs best run on x86 chips and programs that run better on highly parallel GPUs.

AMD is not happy with this split. That is why, last week, it held a press conference in which it revealed hUMA, or heterogeneous Uniform Memory Access.

The technology is meant to bring AMD's Heterogeneous System Architecture (HSA) to the fore and build upon the strides made in general-purpose GPU (GPGPU) software and development.

Long story short, with hUMA, the CPU and GPU share a single memory space, allowing GPUs to directly access CPU memory addresses.

This lets GPUs read and write data that the CPU is also processing.

Since hUMA is a cache-coherent system, the CPU and GPU will always see a consistent view of the data in RAM, meaning that if the GPU changes something there, the CPU will know, and vice versa.

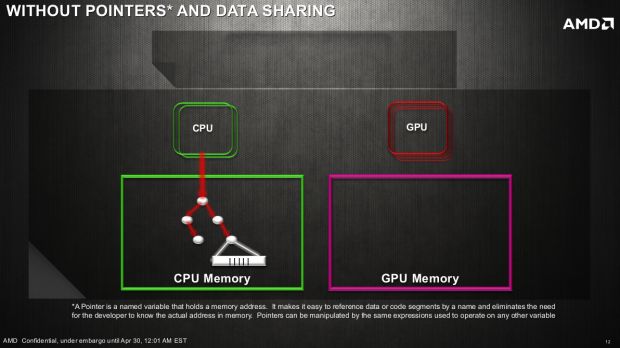

For comparison, in conventional systems (which rely on demand-paged virtual memory), data has to be copied from the CPU's memory to the GPU's memory before the latter can do anything with it.

This copying often happens in hardware, independently of the CPU. It is an efficient but ultimately limited approach, as it cannot do anything with memory that has already been written out to disk.
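The difference between the copy-based model and a shared address space can be illustrated with a toy sketch. It is not AMD's implementation or any real HSA API: plain Python buffers stand in for CPU and GPU memory, and the names `gpu_double`, `run_with_copies`, and `run_shared` are made up for this example.

```python
# Toy model only: plain Python buffers stand in for CPU and GPU memory.
# None of these names correspond to a real AMD or HSA API.

def gpu_double(buffer):
    """Stand-in for a GPU kernel: doubles every byte in place."""
    for i in range(len(buffer)):
        buffer[i] = (buffer[i] * 2) % 256


def run_with_copies(cpu_memory):
    """Conventional model: copy to GPU memory, compute, copy back."""
    gpu_memory = bytearray(cpu_memory)    # copy CPU -> GPU
    gpu_double(gpu_memory)                # GPU works on its own copy
    cpu_memory[:] = gpu_memory            # copy GPU -> CPU
    return 2 * len(cpu_memory)            # bytes moved in this toy model


def run_shared(cpu_memory):
    """hUMA-style model: the GPU works on the CPU's buffer directly."""
    shared_view = memoryview(cpu_memory)  # same bytes, no copy made
    gpu_double(shared_view)
    return 0                              # nothing was copied


data_a = bytearray([1, 2, 3, 4])
data_b = bytearray([1, 2, 3, 4])
copied_a = run_with_copies(data_a)
copied_b = run_shared(data_b)
print(data_a == data_b, copied_a, copied_b)
```

Both paths end with the same result in the CPU's buffer. Only the first one had to move the data twice to get there.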

To access virtual addresses written out to disk, the CPU calls the OS to retrieve the data and put it into the RAM.

With hUMA, the GPU can access the CPU's addresses, and also use the CPU's demand-paged virtual memory.

Basically, the GPU is now allowed to try to access an address that was written out to disk, at which point the CPU will immediately load it into memory.
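The paging behavior described above can be sketched in the same toy fashion. This is a simplified illustration under stated assumptions, not how hUMA hardware or any operating system is actually built: dictionaries stand in for RAM and for the disk, and the class `ToyPagedMemory`, the page number, and its contents are hypothetical.

```python
# Toy model only: dictionaries stand in for RAM and the paged-out area on disk.
# Page numbers, names, and contents are hypothetical.

class ToyPagedMemory:
    def __init__(self, pages_on_disk):
        self.ram = {}                     # page number -> data resident in RAM
        self.disk = dict(pages_on_disk)   # page number -> data paged out
        self.page_faults = 0
        self.fault_log = []               # (requester, page) for each fault

    def read(self, page, requester):
        """Return a page's data, loading it from disk on a fault."""
        if page not in self.ram:
            # Page fault: in this sketch the CPU side services it,
            # regardless of whether the CPU or the GPU asked.
            self.page_faults += 1
            self.fault_log.append((requester, page))
            self.ram[page] = self.disk.pop(page)
        return self.ram[page]


memory = ToyPagedMemory({7: "vertex data"})
first = memory.read(7, requester="gpu")   # paged out: triggers a fault
second = memory.read(7, requester="cpu")  # already resident: no fault
print(first, second, memory.page_faults, memory.fault_log)
```

In this sketch the GPU's request is what pulls the page back into RAM, and the later CPU access finds it already resident.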

Ultimately, this leads to a much faster and smoother switch between CPU-based computation and GPU-based computation.
