Microsoft Research is one step closer to enabling realistic human representations as alter egos for computers, complete with complex details such as facial gestures, so that users feel they are interacting naturally when talking to what is essentially nothing more than a machine.

Human-computer interaction models, especially those related to emerging form factors, are changing rapidly.

Just a few years ago, touch was reserved for select computing paradigms, while at the time of this article a range of devices leverage it as their primary input model, with consumers seeming perfectly capable of forgetting about traditional keyboards and mice.

Certainly there’s more than one way to interact with computers, and the software giant indicates that the future belongs to NUIs (natural user interfaces).

During Microsoft’s annual TechForum, hosted by Chief Research and Strategy Officer Craig Mundie, Zhengyou Zhang, Principal Researcher at Microsoft Research, demonstrated a new 3D Photo Realistic Talking Head.

Users will be able to find the demo featured in the video embedded at the bottom of this article.

“This was one of the technologies on show at Craig Mundie’s TechForum earlier this week,” revealed Steve Clayton, Editor, Next at Microsoft Blog.

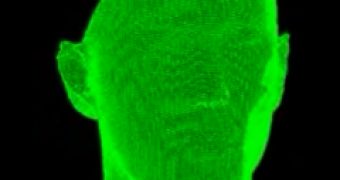

“Zhengyou Zhang takes us through a demo of the talking head – usual approaches are a 2D talking head (used for tech support on many sites) or a 3D avatar. This approach takes the best of the two worlds, taking a 2D photorealistic video and paste this on to a 3D mesh model that is built from a Kinect sensor or webcam.

“In the demo, Zhengyou Zhang uses text to speech (TTS) to ‘drive’ the 3D head in real-time and explains that over time, the 3D head could recognize your facial expression and react in real-time to those.”

The technology has many potential applications, from avatars on steroids, to giving computers a human form in order to ease interaction, to enhancing telepresence solutions.
